1 Linear Regressions

Definition 1.1 A linear regression model is of the form: \[\begin{equation} y_i = \boldsymbol\beta'\mathbf{x}_{i} + \varepsilon_i,\tag{1.1} \end{equation}\] where \(\mathbf{x}_{i}=[x_{i,1},\dots,x_{i,K}]'\) is a vector of dimension \(K \times 1\).

For entity \(i\), the \(x_{i,k}\)’s, for \(k \in \{1,\dots,K\}\), are explanatory variables, regressors, or covariates. The variable of interest, \(y_i\), is often called dependent variable, or regressand. The last term of the specification, namely \(\varepsilon_i\), is called error, or disturbance.

The researcher is usually interested in the components of vector \(\boldsymbol\beta\), denoted by \(\beta_k\), \(k \in \{1,\dots,K\}\). She usually aims at estimating these coefficients based on observations of \(\{y_i,\mathbf{x}_{i}\}\), \(i \in \{1,\dots,n\}\), which constitutes a sample. In the following, we will denote the sample length by \(n\).

To have an intercept in the specification (1.1), one has to set \(x_{i,1}=1\) for all \(i\); \(\beta_1\) then corresponds to the intercept.

1.1 Hypotheses

In this section, we introduce different assumptions regarding the covariates and/or the errors. The properties of the estimators used by the researcher depend on which of these assumptions are satisfied.

Hypothesis 1.1 (Full rank) There is no exact linear relationship among the independent variables (the \(x_{i,k}\)’s, for a given \(i \in \{1,\dots,n\}\)).

Intuitively, when Hypothesis 1.1 is satisfied, then the estimation of the model parameters is unfeasible since, for any value of \(\boldsymbol\beta\), some changes in the explanatory variables will be exactly compensated by other changes in another set of explanatory variables, preventing the identification of these effects.

Let us denote by \(\mathbf{X}\) the matrix containing all explanatory variables, of dimension \(n \times K\). (That is, row \(i\) of \(\mathbf{X}\) is \(\mathbf{x}_i'\).) The following hypothesis concerns the relationship between the errors (gathered in \(\boldsymbol\varepsilon\), a \(n\)-dimensional vector) and the explanatory variables \(\mathbf{X}\):

Hypothesis 1.2 (Conditional mean-zero assumption) \[\begin{equation} \mathbb{E}(\boldsymbol\varepsilon|\mathbf{X}) = 0. \end{equation}\]

Hypothesis 1.2 has important implications:

Proposition 1.1 Under Hypothesis 1.2:

- \(\mathbb{E}(\varepsilon_{i})=0\);

- The \(x_{ij}\)’s and the \(\varepsilon_{i}\)’s are uncorrelated, i.e. \(\forall i,\,j \quad \mathbb{C}orr(x_{ij},\varepsilon_{i})=0\).

Proof. Let us prove (i) and (ii):

- By the law of iterated expectations: \[ \mathbb{E}(\boldsymbol\varepsilon)=\mathbb{E}(\mathbb{E}(\boldsymbol\varepsilon|\mathbf{X}))=\mathbb{E}(0)=0. \]

- \(\mathbb{E}(x_{ij}\varepsilon_i)=\mathbb{E}(\mathbb{E}(x_{ij}\varepsilon_i|\mathbf{X}))=\mathbb{E}(x_{ij}\underbrace{\mathbb{E}(\varepsilon_i|\mathbf{X})}_{=0})=0\).

The next two hypotheses (1.3 and 1.4) concern the stochastic properties of the errors \(\varepsilon_i\):

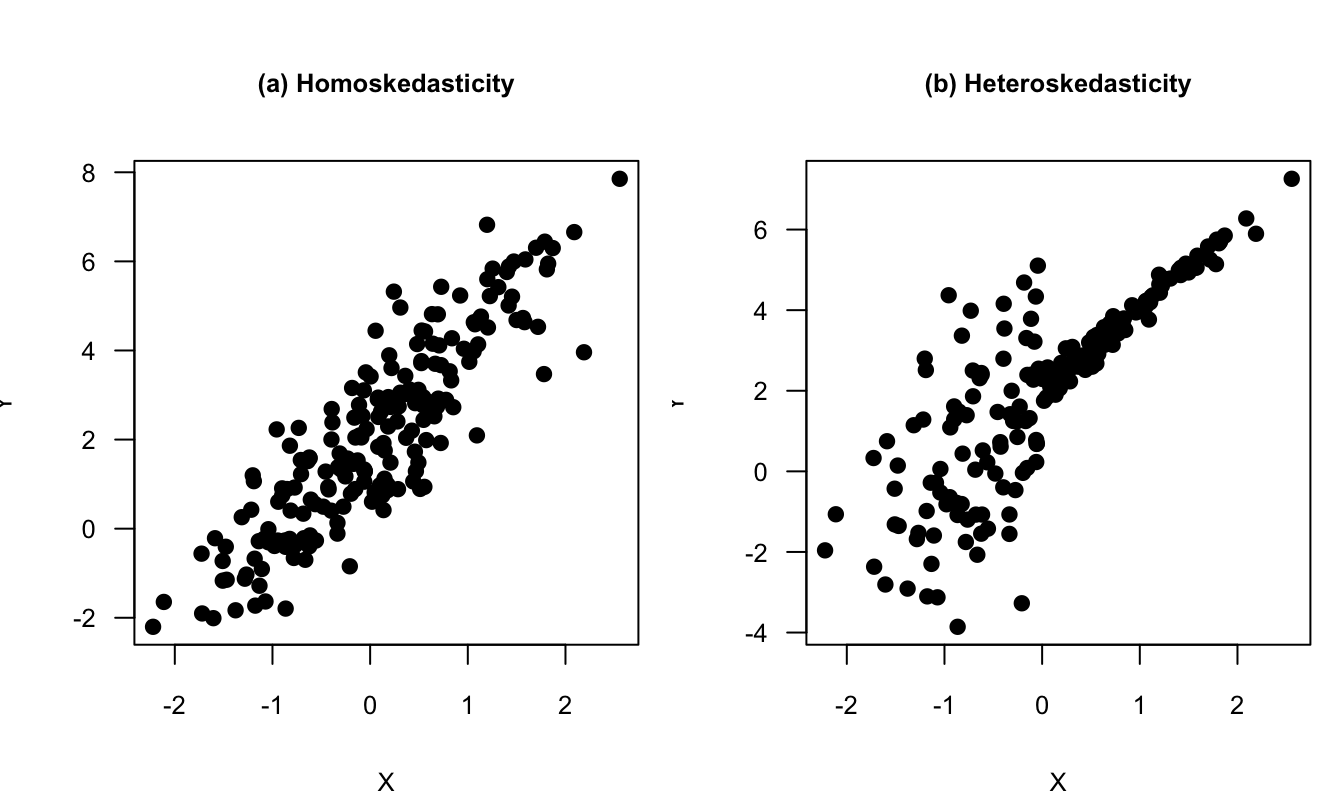

Hypothesis 1.3 (Homoskedasticity) \[ \forall i, \quad \mathbb{V}ar(\varepsilon_i|\mathbf{X}) = \sigma^2. \]

The following lines of code generate a figure comparing two situations: Panel (a) of Figure 1.1 corresponds to a situation of homoskedasticity, and Panel (b) corresponds to a situation of heteroskedasticity. Let us be more specific. In the two plots, we have \(X_i \sim \mathcal{N}(0,1)\) and \(\varepsilon^*_i \sim \mathcal{N}(0,1)\). In Panel (a) (homoskedasticity): \[ Y_i = 2 + 2X_i + \varepsilon^*_i. \] In Panel (b) (heteroskedasticity): \[ Y_i = 2 + 2X_i + \left(2\mathbb{I}_{\{X_i<0\}}+0.2\mathbb{I}_{\{X_i\ge0\}}\right)\varepsilon^*_i\].

N <- 200

X <- rnorm(N);eps <- rnorm(N)

par(mfrow=c(1,2),plt=c(.2,.95,.2,.8))

Y <- 2 + 2*X + eps

plot(X,Y,pch=19,main="(a) Homoskedasticity",

las=1,cex.lab=.8,cex.axis=.8,cex.main=.8,)

Y <- 2 + 2*X + eps*( (X<0)*2 + (X>=0)*.2 )

plot(X,Y,pch=19,main="(b) Heteroskedasticity",

las=1,cex.lab=.8,cex.axis=.8,cex.main=.8,)

Figure 1.1: Homoskedasticity vs heteroskedasticity. See text for the exact specifications.

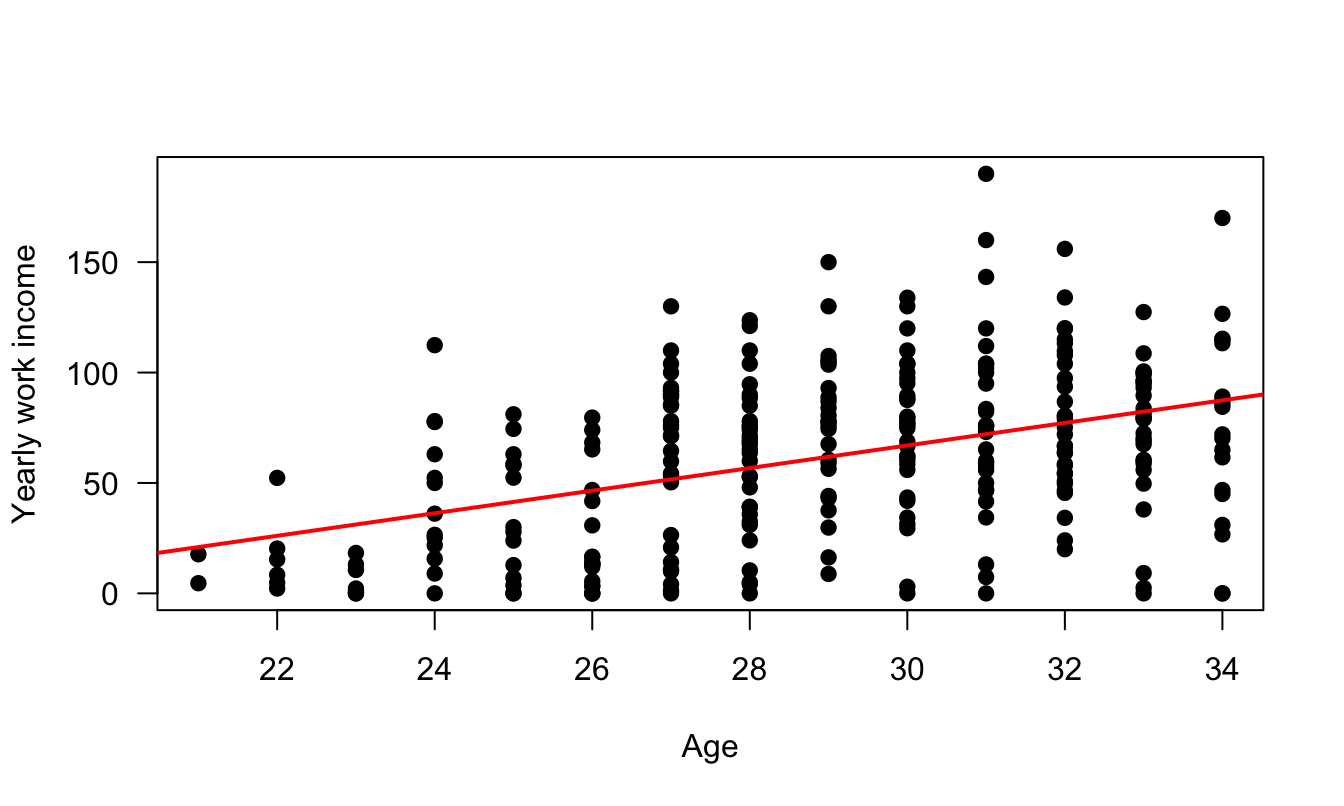

Figure 1.2 shows a real-data situation of heteroskedasticity, based on data taken from the Swiss Household Panel. The sample is restricted to persons (i) that are younger than 35 year in 2019, and (ii) that have completed at least 19 years of study. The figure shows that the dispersion of yearly income increases with age.

##

## 8 9 10 12 13 14 16 19 21

## 70 325 350 1985 454 117 990 1263 168

shp_higherEd <- subset(shp,(edyear19>18)&age19<35)

plot(i19wyg/1000~age19,data=shp_higherEd,pch=19,las=1,

xlab="Age",ylab="Yearly work income")

abline(lm(i19wyg/1000~age19,data=shp_higherEd),col="red",lwd=2)

Figure 1.2: Income versus age. Data are from the Swiss Household Panel. The sample is restricted to persons that have completed at least 19 years of study. The figure shows that the dispersion of yearly income increases with age.

The next assumption concerns the correlation of the errors across entities.

Hypothesis 1.4 (Uncorrelated errors) \[ \forall i \ne j, \quad \mathbb{C}ov(\varepsilon_i,\varepsilon_j|\mathbf{X})=0. \]

We will often need to work with the covariance matrix of the errors. Proposition 1.2 give the specific form of the covariance matrix of the errors —conditional on \(\mathbf{X}\)— when both Hypotheses 1.3 and 1.4 are satisfied:

Proposition 1.2 If Hypotheses 1.3 and 1.4 hold, then: \[ \mathbb{V}ar(\boldsymbol\varepsilon|\mathbf{X})= \sigma^2 Id, \] where \(Id\) is the \(n \times n\) identity matrix.

We will sometimes assume that errors are Gaussian—or normal. We will then invoke Hypothesis 1.5:

Hypothesis 1.5 (Normal distribution) \[ \forall i, \quad \varepsilon_i \sim \mathcal{N}(0,\sigma^2). \]

1.2 Least square estimation

1.2.1 Derivation of the OLS formula

In this section, we will present and study the properties of the most popular estimation approach, namely the Ordinary Least Squares (OLS) approach. As suggested by its name, the OLS estimator of \(\boldsymbol\beta\) is defined as the vector \(\mathbf{b}\) that minimizes the sum of squared residuals. (The residuals are the estimates of the errors \(\varepsilon_i\).)

For a given vector of coefficients \(\mathbf{b}=[b_1,\dots,b_K]'\), the sum of squared residuals is: \[ f(\mathbf{b}) =\sum_{i=1}^n \left(y_i - \sum_{j=1}^K x_{i,j} b_j \right)^2 = \sum_{i=1}^n (y_i - \mathbf{x}_i' \mathbf{b})^2. \] Minimizing this sum amounts to minimizing: \[ f(\mathbf{b}) = (\mathbf{y} - \mathbf{X}\mathbf{b})'(\mathbf{y} - \mathbf{X}\mathbf{b}). \]

Since:1 \[ \frac{\partial f}{\partial \mathbf{b}}(\mathbf{b}) = - 2 \mathbf{X}'\mathbf{y} + 2 \mathbf{X}'\mathbf{X}\mathbf{b}, \] it comes that a necessary first-order condition (FOC) is: \[\begin{equation} \mathbf{X}'\mathbf{X}\mathbf{b} = \mathbf{X}'\mathbf{y}.\tag{1.2} \end{equation}\] Under Assumption 1.1, \(\mathbf{X}'\mathbf{X}\) is invertible. Hence: \[ \boxed{\mathbf{b} = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\mathbf{y}.} \] Vector \(\mathbf{b}\) minimizes the sum of squared residuals. (\(f\) is a non-negative quadratic function, it therefore admits a minimum.)

We have: \[ \mathbf{y} = \underbrace{\mathbf{X}\mathbf{b}}_{\mbox{fitted values } (\hat{\mathbf{y}})} + \underbrace{\mathbf{e}}_{\mbox{residuals}} \]

The estimated residuals are: \[\begin{equation} \mathbf{e} = \mathbf{y} - \mathbf{X} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{y} = \mathbf{M} \mathbf{y},\tag{1.3} \end{equation}\] where \(\mathbf{M} := Id - \mathbf{X} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\) is called the residual maker matrix.

Moreover, the fitted values \(\hat{\mathbf{y}}\) are given by: \[\begin{equation} \hat{\mathbf{y}}=\mathbf{X} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{y} = \mathbf{P} \mathbf{y},\tag{1.4} \end{equation}\] where \(\mathbf{P}=\mathbf{X} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\) is a projection matrix.

These matrices \(\mathbf{M}\) and \(\mathbf{P}\) are such that:

- \(\mathbf{M} \mathbf{X} = \mathbf{0}\): if one regresses one of the explanatory variables on \(\mathbf{X}\), the residuals are null.

- \(\mathbf{M}\mathbf{y}=\mathbf{M}\boldsymbol\varepsilon\) (because \(\mathbf{y} = \mathbf{X}\boldsymbol\beta + \boldsymbol\varepsilon\) and \(\mathbf{M} \mathbf{X} = \mathbf{0}\)).

Here are some additional properties of \(\mathbf{M}\) and \(\mathbf{P}\):

- \(\mathbf{M}\) is symmetric (\(\mathbf{M} = \mathbf{M}'\)) and idempotent (\(\mathbf{M} = \mathbf{M}^2 = \mathbf{M}^k\) for \(k>0\)).

- \(\mathbf{P}\) is symmetric and idempotent.

- \(\mathbf{P}\mathbf{X} = \mathbf{X}\).

- \(\mathbf{P} \mathbf{M} = \mathbf{M} \mathbf{P} = 0\).

- \(\mathbf{y} = \mathbf{P}\mathbf{y} + \mathbf{M}\mathbf{y}\) (decomposition of \(\mathbf{y}\) into two orthogonal parts).

It is easily checked that \(\mathbf{X}'\mathbf{e}=0\). Each column of \(\mathbf{X}\) is therefore orthogonal to \(\mathbf{e}\). In particular, if an intercept is included in the regression (\(x_{i,1} \equiv 1\) for all \(i\)’s, i.e., the first column of \(\mathbf{X}\) is filled with ones), the average of the residuals is null.

Example 1.1 (Bivariate case) Consider a bivariate situation, where we regress \(y_i\) on a constant and an explanatory variable \(w_i\). We have \(K=2\), and \(\mathbf{X}\) is a \(n \times 2\) matrix whose \(i^{th}\) row is \([x_{i,1},x_{i,2}]\), with \(x_{i,1}=1\) (to account for the intercept) and with \(w_i = x_{i,2}\) (say).

We have: \[\begin{eqnarray*} \mathbf{X}'\mathbf{X} &=& \left[\begin{array}{cc} n & \sum_i w_i \\ \sum_i w_i & \sum_i w_i^2 \end{array} \right],\\ (\mathbf{X}'\mathbf{X})^{-1} &=& \frac{1}{n\sum_i w_i^2-(\sum_i w_i)^2} \left[\begin{array}{cc} \sum_i w_i^2 & -\sum_i w_i \\ -\sum_i w_i & n \end{array} \right],\\ (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{y} &=& \frac{1}{n\sum_i w_i^2-(\sum_i w_i)^2} \left[\begin{array}{c} \sum_i w_i^2\sum_i y_i -\sum_i w_i \sum_i w_iy_i \\ -\sum_i w_i \sum_i y_i + n \sum_i w_i y_i \end{array} \right]\\ &=& \frac{1}{\frac{1}{n}\sum_i(w_i - \bar{w})^2} \left[\begin{array}{c} \frac{\bar{y}}{n}\sum_i w_i^2 -\frac{\bar{w}}{n}\sum_i w_iy_i \\ \frac{1}{n}\sum_i (w_i-\bar{w})(y_i-\bar{y}) \end{array} \right]. \end{eqnarray*}\]

It can be seen that the second element of \(\mathbf{b}=(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{y}\) is: \[ b_2 = \frac{\overline{\mathbb{C}ov(W,Y)}}{\overline{\mathbb{V}ar(W)}}, \] where \(\overline{\mathbb{C}ov(W,Y)}\) and \(\overline{\mathbb{V}ar(W)}\) are sample estimates.

Since there is a constant in the regression, we have \(b_1 = \bar{y} - b_2 \bar{w}\).

1.2.2 Properties of the OLS estimate (small sample)

The OLS properties stated in Proposition 1.3 are valid for any sample size \(n\):

Proposition 1.3 (Properties of the OLS estimator) We have:

Proof. Under Hypothesis 1.1, \(\mathbf{X}'\mathbf{X}\) can be inverted. We have: \[ \mathbf{b} = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\mathbf{y} = \boldsymbol\beta + (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{\varepsilon}. \]

- Let us consider the expectation of the last term, i.e. \(\mathbb{E}((\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{\varepsilon})\). Using the law of iterated expectations, we obtain: \[ \mathbb{E}((\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{\varepsilon}) = \mathbb{E}(\mathbb{E}[(\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{\varepsilon}|\mathbf{X}]) = \mathbb{E}((\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\mathbb{E}[\mathbf{\varepsilon}|\mathbf{X}]). \] By Hypothesis 1.2, we have \(\mathbb{E}[\mathbf{\varepsilon}|\mathbf{X}]=0\). Hence \(\mathbb{E}((\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{\varepsilon}) =0\) and result (i) follows.

- \(\mathbb{V}ar(\mathbf{b}|\mathbf{X}) = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbb{E}(\boldsymbol\varepsilon\boldsymbol\varepsilon'|\mathbf{X}) \mathbf{X} (\mathbf{X}'\mathbf{X})^{-1}\). By Prop. 1.2, if 1.3 and 1.4 hold, then we have \(\mathbb{E}(\boldsymbol\varepsilon\boldsymbol\varepsilon'|\mathbf{X})=\mathbb{V}ar(\boldsymbol\varepsilon|\mathbf{X})=\sigma^2 Id\).

Together, Hypotheses 1.1 to 1.4 form the so-called Gauss-Markov set of assumptions. Under these assumptions, the OLS estimator feature the lowest possible variance within the family of linear unbiased estimates of \(\boldsymbol\beta\):

Theorem 1.1 (Gauss-Markov Theorem) Under Assumptions 1.1 to 1.4, for any vector \(w\), the minimum-variance linear unbiased estimator of \(w' \boldsymbol\beta\) is \(w' \mathbf{b}\), where \(\mathbf{b}\) is the least squares estimator. (BLUE: Best Linear Unbiased Estimator.)

Proof. Consider \(\mathbf{b}^* = C \mathbf{y}\), another linear unbiased estimator of \(\boldsymbol\beta\). Since it is unbiased, we must have \(\mathbb{E}(C\mathbf{y}|\mathbf{X}) = \mathbb{E}(C\mathbf{X}\boldsymbol\beta + C\boldsymbol\varepsilon|\mathbf{X}) = \boldsymbol\beta\). We have \(\mathbb{E}(C\boldsymbol\varepsilon|\mathbf{X})=C\mathbb{E}(\boldsymbol\varepsilon|\mathbf{X})=0\) (by 1.2). Therefore \(\mathbf{b}^*\) is unbiased if \(\mathbb{E}(C\mathbf{X})\boldsymbol\beta=\boldsymbol\beta\). This has to be the case for any \(\boldsymbol\beta\), which implies that we must have \(C\mathbf{X}=Id\). Let us compute \(\mathbb{V}ar(\mathbf{b^*}|\mathbf{X})\). For this, we introduce \(D = C - (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\), which is such that \(D\mathbf{y}=\mathbf{b}^*-\mathbf{b}\). The fact that \(C\mathbf{X}=Id\) implies that \(D\mathbf{X} = \mathbf{0}\). We have \(\mathbb{V}ar(\mathbf{b^*}|\mathbf{X}) = \mathbb{V}ar(C \mathbf{y}|\mathbf{X}) =\mathbb{V}ar(C \boldsymbol\varepsilon|\mathbf{X}) = \sigma^2CC'\) (by Assumptions 1.3 and 1.4, see Prop. 1.2). Using \(C=D+(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\) and exploiting the fact that \(D\mathbf{X} = \mathbf{0}\) leads to: \[ \mathbb{V}ar(\mathbf{b^*}|\mathbf{X}) =\sigma^2\left[(D+(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}')(D+(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}')'\right] = \mathbb{V}ar(\mathbf{b}|\mathbf{X}) + \sigma^2 \mathbf{D}\mathbf{D}'. \] Therefore, we have \[\begin{eqnarray*} &&\mathbb{V}ar(w'\mathbf{b^*}|\mathbf{X})=w'\mathbb{V}ar(\mathbf{b}|\mathbf{X})w + \sigma^2 w'\mathbf{D}\mathbf{D}'w\\ &\ge& w'\mathbb{V}ar(\mathbf{b}|\mathbf{X})w=\mathbb{V}ar(w'\mathbf{b}|\mathbf{X}). \end{eqnarray*}\]

The Frish-Waugh theorem (Theorem 1.2) reveals the relationship between the OLS estimator and the notion of partial correlation coefficient. Consider the linear least square regression of \(\mathbf{y}\) on \(\mathbf{X}\). We introduce the notations:

- \(\mathbf{b}^{\mathbf{y}/\mathbf{X}}\): OLS estimates of \(\boldsymbol\beta\),

- \(\mathbf{M}^{\mathbf{X}}\): residual-maker matrix of any regression on \(\mathbf{X}\) (given by \(Id - \mathbf{X} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\)),

- \(\mathbf{P}^{\mathbf{X}}\): projection matrix of any regression on \(\mathbf{X}\) (given by \(\mathbf{X} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\)).

Let us split the set of explanatory variables into two: \(\mathbf{X} = [\mathbf{X}_1,\mathbf{X}_2]\). With obvious notations: \(\mathbf{b}^{\mathbf{y}/\mathbf{X}}=[\mathbf{b}_1',\mathbf{b}_2']'\).

Theorem 1.2 (Frisch-Waugh Theorem) We have: \[ \mathbf{b}_2 = \mathbf{b}^{\mathbf{M^{\mathbf{X}_1}y}/\mathbf{M^{\mathbf{X}_1}\mathbf{X}_2}}. \]

Proof. The minimization of the least squares leads to (these are first-order conditions, see Eq. (1.2)): \[ \left[ \begin{array}{cc} \mathbf{X}_1'\mathbf{X}_1 & \mathbf{X}_1'\mathbf{X}_2 \\ \mathbf{X}_2'\mathbf{X}_1 & \mathbf{X}_2'\mathbf{X}_2\end{array}\right] \left[ \begin{array}{c} \mathbf{b}_1 \\ \mathbf{b}_2\end{array}\right] = \left[ \begin{array}{c} \mathbf{X}_1' \mathbf{y} \\ \mathbf{X}_2' \mathbf{y} \end{array}\right]. \] Use the first-row block of equations to solve for \(\mathbf{b}_1\) first; it comes as a function of \(\mathbf{b}_2\). Then use the second set of equations to solve for \(\mathbf{b}_2\), which leads to: \[\begin{eqnarray*} \mathbf{b}_2 &=& [\mathbf{X}_2'\mathbf{X}_2 - \mathbf{X}_2'\mathbf{X}_1(\mathbf{X}_1'\mathbf{X}_1)\mathbf{X}_1'\mathbf{X}_2]^{-1}\mathbf{X}_2'(Id - \mathbf{X}_1(\mathbf{X}_1'\mathbf{X}_1)\mathbf{X}_1')\mathbf{y}\\ &=& [\mathbf{X}_2' \mathbf{M}^{\mathbf{X}_1}\mathbf{X}_2]^{-1}\mathbf{X}_2'\mathbf{M}^{\mathbf{X}_1}\mathbf{y}. \end{eqnarray*}\] Using the fact that \(\mathbf{M}^{\mathbf{X}_1}\) is idempotent and symmetric leads to the result.

This suggests a second way of estimating \(\mathbf{b}_2\):

- Regress \(Y\) on \(X_1\), regress \(X_2\) on \(X_1\).

- Regress the residuals associated with the former regression on those associated with the latter regressions.

This is illustrated by the following code, where we run different regressions involving the number of Google searches for “parapluie” (umbrella in French). In the broad specification, we regress it on French precipitations and month dummies. Next, we deseasonalize both the dependent variable and the precipitations by regressing them on the month dummies. As stated by Theorem 1.2, regressing deseasonalized Google searches on deseasonalized precipitations give the same coefficient as in the baseline regression.

library(AEC)

dummies <- as.matrix(parapluie[,4:14])

eq_all <- lm(parapluie~dummies+precip,data=parapluie)

deseas_parapluie <- lm(parapluie~dummies,data=parapluie)$residuals

deseas_precip <- lm(precip~dummies,data=parapluie)$residuals

eq_frac <- lm(deseas_parapluie~deseas_precip-1)

stargazer::stargazer(eq_all,eq_frac,omit=c(1:11,"Constant"),type="text",

omit.stat = c("f","ser"),digits=5,

add.lines=list(c('Monthly dummy','Yes','No')))##

## ==========================================

## Dependent variable:

## ----------------------------

## parapluie deseas_parapluie

## (1) (2)

## ------------------------------------------

## precip 0.13001***

## (0.03594)

##

## deseas_precip 0.13001***

## (0.03277)

##

## ------------------------------------------

## Monthly dummy Yes No

## Observations 72 72

## R2 0.51793 0.18148

## Adjusted R2 0.41988 0.16995

## ==========================================

## Note: *p<0.1; **p<0.05; ***p<0.01When \(b_2\) is scalar (and then \(\mathbf{X}_2\) is of dimension \(n \times 1\)), Theorem 1.2 gives the expression of the partial regression coefficient \(b_2\): \[ b_2 = \frac{\mathbf{X}_2'M^{\mathbf{X}_1}\mathbf{y}}{\mathbf{X}_2'M^{\mathbf{X}_1}\mathbf{X}_2}. \]

1.2.3 Goodness of fit

Define the total variation in \(y\) as the sum of squared deviations (from the sample mean): \[ TSS = \sum_{i=1}^{n} (y_i - \bar{y})^2. \] We have: \[ \mathbf{y} = \mathbf{X}\mathbf{b} + \mathbf{e} = \hat{\mathbf{y}} + \mathbf{e} \] In the following, we assume that the regression includes a constant (i.e. for all \(i\), \(x_{i,1}=1\)). Denote by \(\mathbf{M}^0\) the matrix that transforms observations into deviations from sample means. Using that \(\mathbf{M}^0 \mathbf{e} = \mathbf{e}\) and that \(\mathbf{X}' \mathbf{e}=0\), we have: \[\begin{eqnarray*} \underbrace{\mathbf{y}'\mathbf{M}^0\mathbf{y}}_{\mbox{Total sum of sq.}} &=& (\mathbf{X}\mathbf{b} + \mathbf{e})' \mathbf{M}^0 (\mathbf{X}\mathbf{b} + \mathbf{e})\\ &=& \underbrace{\mathbf{b}' \mathbf{X}' \mathbf{M}^0 \mathbf{X}\mathbf{b}}_{\mbox{"Explained" sum of sq.}} + \underbrace{\mathbf{e}'\mathbf{e}}_{\mbox{Sum of sq. residuals}}\\ TSS &=& Expl.SS + SSR. \end{eqnarray*}\]

We can now define the coefficient of determination: \[\begin{equation} \boxed{\mbox{Coefficient of determination} = \frac{Expl.SS}{TSS} = 1 - \frac{SSR}{TSS} = 1 - \frac{\mathbf{e}'\mathbf{e}}{\mathbf{y}'\mathbf{M}^0\mathbf{y}}.}\tag{1.5} \end{equation}\]

It can be shown (Greene (2003), Section 3.5) that: \[ \mbox{Coefficient of determination} = \frac{[\sum_{i=1}^n(y_i - \bar{y})(\hat{y_i} - \bar{y})]^2}{\sum_{i=1}^n(y_i - \bar{y})^2 \sum_{i=1}^n(\hat{y_i} - \bar{y})^2}. \] That is, the \(R^2\) is the sample squared correlation between \(y\) and the (regression-implied) \(y\)’s predictions.

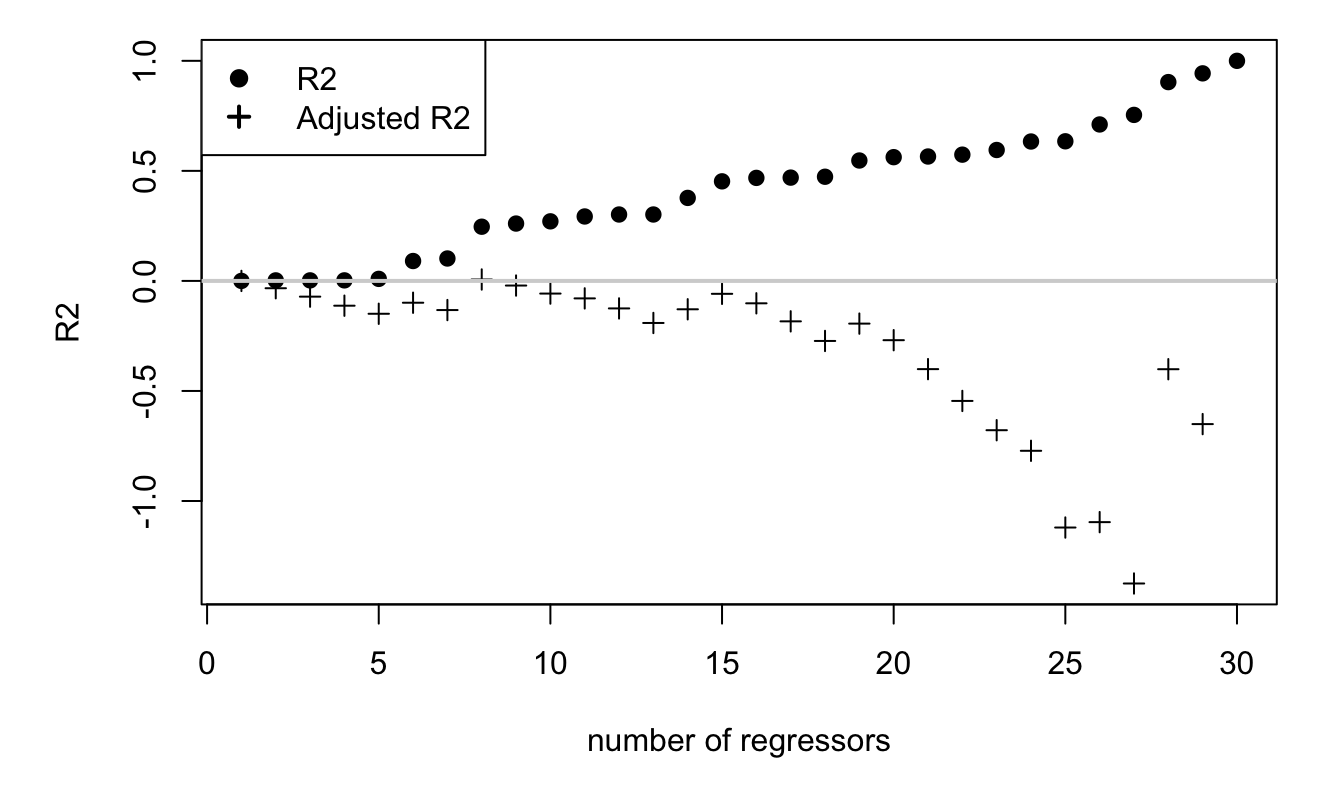

The hgher the \(R^2\), the higher the goodness of fit of a model. One however has to be cautious with \(R^2\). Indeed, it is easy to increase it: it suffices to add explanatory variables. As stated by Proposition 1.5, adding an explanatory variable (even if it does not truly relate to the dependent variable) mechanically results in an increase in the \(R^2\). In the limit, taking any set of \(n\) non-linearly-dependent explanatory variables (i.e., variables satisfying Hypothesis 1.1) results in a \(R^2\) equal to one.

Proposition 1.4 (Change in SSR when a variable is added) We have: \[\begin{equation} \mathbf{u}'\mathbf{u} = \mathbf{e}'\mathbf{e} - c^2(\mathbf{z^*}'\mathbf{z^*}) \qquad (\le \mathbf{e}'\mathbf{e}) \tag{1.6} \end{equation}\] where (i) \(\mathbf{u}\) and \(\mathbf{e}\) are the residuals in the regressions of \(\mathbf{y}\) on \([\mathbf{X},\mathbf{z}]\) and of \(\mathbf{y}\) on \(\mathbf{X}\), respectively, (ii) \(c\) is the regression coefficient on \(\mathbf{z}\) in the former regression and where \(\mathbf{z}^*\) are the residuals in the regression of \(\mathbf{z}\) on \(\mathbf{X}\).

Proof. The OLS estimates \([\mathbf{d}',\mathbf{c}]'\) in the regression of \(\mathbf{y}\) on \([\mathbf{X},\mathbf{z}]\) satisfies (first-order cond., Eq. (1.2)): \[ \left[ \begin{array}{cc} \mathbf{X}'\mathbf{X} & \mathbf{X}'\mathbf{z} \\ \mathbf{z}'\mathbf{X} & \mathbf{z}'\mathbf{z}\end{array}\right] \left[ \begin{array}{c} \mathbf{d} \\ c\end{array}\right] = \left[ \begin{array}{c} \mathbf{X}' \mathbf{y} \\ \mathbf{z}' \mathbf{y} \end{array}\right]. \] Hence, in particular \(\mathbf{d} = \mathbf{b} - (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{z}c\), where \(\mathbf{b}\) is the OLS of \(\mathbf{y}\) on \(\mathbf{X}\). Substituting in \(\mathbf{u} = \mathbf{y} - \mathbf{X}\mathbf{d} - \mathbf{z}c\), we get \(\mathbf{u} = \mathbf{e} - \mathbf{z}^*c\). We therefore have: \[\begin{equation} \mathbf{u}'\mathbf{u} = (\mathbf{e} - \mathbf{z}^*c)(\mathbf{e} - \mathbf{z}^*c)= \mathbf{e}'\mathbf{e} + c^2(\mathbf{z^*}'\mathbf{z^*}) - 2 c\mathbf{z^*}'\mathbf{e}.\tag{1.7} \end{equation}\] Now \(\mathbf{z^*}'\mathbf{e} = \mathbf{z^*}'(\mathbf{y} - \mathbf{X}\mathbf{b}) = \mathbf{z^*}'\mathbf{y}\) because \(\mathbf{z}^*\) are the residuals in an OLS regression on \(\mathbf{X}\). Since \(c = (\mathbf{z^*}'\mathbf{z^*})^{-1}\mathbf{z^*}'\mathbf{y^*}\) (by an application of Theorem 1.2), we have \((\mathbf{z^*}'\mathbf{z^*})c = \mathbf{z^*}'\mathbf{y^*}\) and, therefore, \(\mathbf{z^*}'\mathbf{e} = (\mathbf{z^*}'\mathbf{z^*})c\). Inserting this in Eq. (1.7) leads to the results.

Proposition 1.5 (Change in the coefficient of determination when a variable is added) Denoting by \(R_W^2\) the coefficient of determination in the regression of \(\mathbf{y}\) on some variable \(\mathbf{W}\), we have: \[ R_{\mathbf{X},\mathbf{z}}^2 = R_{\mathbf{X}}^2 + (1-R_{\mathbf{X}}^2)(r_{yz}^\mathbf{X})^2, \] where \(r_{yz}^\mathbf{X}\) is the coefficient of partial correlation (see Definition 8.5).

Proof. Let’s use the same notations as in Prop. 1.4. Theorem 1.2 implies that \(c = (\mathbf{z^*}'\mathbf{z^*})^{-1}\mathbf{z^*}'\mathbf{y^*}\). Using this in Eq. (1.6) gives \(\mathbf{u}'\mathbf{u} = \mathbf{e}'\mathbf{e} - (\mathbf{z^*}'\mathbf{y^*})^2/(\mathbf{z^*}'\mathbf{z^*})\). Using the definition of the partial correlation (Eq. (8.1)), we get \(\mathbf{u}'\mathbf{u} = \mathbf{e}'\mathbf{e}\left(1 - (r_{yz}^\mathbf{X})^2\right)\). The results is obtained by dividing both sides of the previous equation by \(\mathbf{y}'\mathbf{M}_0\mathbf{y}\).

Figure 1.3, below, illustrates the fact that one can obtain an \(R^2\) of one by regressing a sample of length \(n\) on any set of \(n\) linearly-independent variables.

n <- 30;Y <- rnorm(n);X <- matrix(rnorm(n^2),n,n)

all_R2 <- NULL;all_adjR2 <- NULL

for(j in 0:(n-1)){

if(j==0){eq <- lm(Y~1)}else{eq <- lm(Y~X[,1:j])}

all_R2 <- c(all_R2,summary(eq)$r.squared)

all_adjR2 <- c(all_adjR2,summary(eq)$adj.r.squared)

}

par(plt=c(.15,.95,.25,.95))

plot(all_R2,pch=19,ylim=c(min(all_adjR2,na.rm = TRUE),1),

xlab="number of regressors",ylab="R2")

points(all_adjR2,pch=3);abline(h=0,col="light grey",lwd=2)

legend("topleft",c("R2","Adjusted R2"),

lty=NaN,col=c("black"),pch=c(19,3),lwd=2)

Figure 1.3: This figure illustrates the monotonous increase in the \(R^2\) as a function of the number of explanatory variables. In the true model, there is no explanatory variables, i.e., \(y_i = \varepsilon_i\). We then take (independent) regressors and regress \(y\) on the latter, progressively increasing the set of regressors.

In order to address the risk of adding irrelevant explanatory variables, measures of adjusted \(R^2\) have been proposed. Compared to the standard \(R^2\), these measures add penalties that depend on the number of covariates employed in the regression. A common adjusted \(R^2\) measure, denoted by \(\bar{R}^2\), is the following: \[\begin{equation*} \boxed{\bar{R}^2 = 1 - \frac{\mathbf{e}'\mathbf{e}/(n-K)}{\mathbf{y}'\mathbf{M}^0\mathbf{y}/(n-1)} = 1 - \frac{n-1}{n-K}(1-R^2).} \end{equation*}\]

1.2.4 Inference and confidence intervals (in small sample)

Under the normality assumption (Assumption 1.5), we know the distribution of \(\mathbf{b}\) (conditional on \(\mathbf{X}\)). Indeed, \(\mathbf{b} = \boldsymbol\beta + (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}'\boldsymbol\varepsilon\). Therefore, conditional on \(\mathbf{X}\), vector \(\mathbf{b}\) is an affine combination of Gaussian variables—the components of \(\boldsymbol\varepsilon\). As a result, it is also Gaussian. Its distribution is therefore completely characterized by its mean and variance, and we have: \[\begin{equation} \mathbf{b}|\mathbf{X} \sim \mathcal{N}(\boldsymbol\beta,\sigma^2(\mathbf{X}'\mathbf{X})^{-1}).\tag{1.8} \end{equation}\]

Eq. (1.8) can be used to conduct inference and tests. However, in practice, we do not know \(\sigma^2\) (which is a population parameter). The following proposition gives an unbiased estimate of \(\sigma^2\).

Proposition 1.6 Under 1.1 to 1.4, an unbiased estimate of \(\sigma^2\) is given by: \[\begin{equation} s^2 = \frac{\mathbf{e}'\mathbf{e}}{n-K}.\tag{1.9} \end{equation}\] (It is sometimes denoted by \(\sigma^2_{OLS}\).)

Proof. We have: \[\begin{eqnarray*} \mathbb{E}(\mathbf{e}'\mathbf{e}|\mathbf{X})&=&\mathbb{E}(\boldsymbol{\varepsilon}'\mathbf{M}\boldsymbol{\varepsilon}|\mathbf{X})=\mathbb{E}(\mbox{Tr}(\boldsymbol{\varepsilon}'\mathbf{M}\boldsymbol{\varepsilon})|\mathbf{X}))\\ &=&\mbox{Tr}(\mathbf{M}\mathbb{E}(\boldsymbol{\varepsilon}\boldsymbol{\varepsilon}'|\mathbf{X}))=\sigma^2 \mbox{Tr}(\mathbf{M}). \end{eqnarray*}\] (Note that we have \(\mathbb{E}(\boldsymbol{\varepsilon}\boldsymbol{\varepsilon}'|\mathbf{X})=\sigma^2Id\) by Assumptions 1.3 and 1.4, see Prop. 1.2.) Moreover: \[\begin{eqnarray*} \mbox{Tr}(\mathbf{M})&=&n-\mbox{Tr}(\mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}')\\ &=&n-\mbox{Tr}((\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{X})=n-\mbox{Tr}(Id_{K \times K}), \end{eqnarray*}\] which leads to the result.

Two results will prove important to produce inference:

- We know the conditional distribution of \(s^2\) (Prop. 1.7).

- \(s^2\) and \(\mathbf{b}\) are independent random variables (Prop. 1.8).

Proposition 1.7 Under 1.1 to 1.5, we have: \(\dfrac{s^2}{\sigma^2} | \mathbf{X} \sim \frac{1}{n-K}\chi^2(n-K)\).

Proof. We have \(\mathbf{e}'\mathbf{e}=\boldsymbol\varepsilon'\mathbf{M}\boldsymbol\varepsilon\). \(\mathbf{M}\) is an idempotent symmetric matrix. Therefore it can be decomposed as \(PDP'\) where \(D\) is a diagonal matrix and \(P\) is an orthogonal matrix. As a result \(\mathbf{e}'\mathbf{e} = (P'\boldsymbol\varepsilon)'D(P'\boldsymbol\varepsilon)\), i.e. \(\mathbf{e}'\mathbf{e}\) is a weighted sum of independent squared Gaussian variables (the entries of \(P'\boldsymbol\varepsilon\) are independent because they are Gaussian —under 1.5— and uncorrelated). The variance of each of these i.i.d. Gaussian variable is \(\sigma^2\). Because \(\mathbf{M}\) is an idempotent symmetric matrix, its eigenvalues are either 0 or 1, and its rank equals its trace (see Propositions 8.3 and 8.4). Further, its trace is equal to \(n-K\) (see proof of Eq. (1.9)). Therefore \(D\) has \(n-K\) entries equal to 1 and \(K\) equal to 0. Hence, \(\mathbf{e}'\mathbf{e} = (P'\boldsymbol\varepsilon)'D(P'\boldsymbol\varepsilon)\) is a sum of \(n-K\) squared independent Gaussian variables of variance \(\sigma^2\). Therefore \(\frac{\mathbf{e}'\mathbf{e}}{\sigma^2} = (n-K)\frac{s^2}{\sigma^2}\) is a sum of \(n-k\) squared i.i.d. standard normal variables. The result follows by the definition of the chi-square distribution (see Def. 8.13).

Proof. We have \(\mathbf{b}=\boldsymbol\beta + [\mathbf{X}'{\mathbf{X}}]^{-1}\mathbf{X}\boldsymbol\varepsilon\) and \(s^2 = \boldsymbol\varepsilon' \mathbf{M} \boldsymbol\varepsilon/(n-K)\). Hence \(\mathbf{b}\) is an affine combination of \(\boldsymbol\varepsilon\) and \(s^2\) is a quadratic combination of the same Gaussian shocks. One can write \(s^2\) as \(s^2 = (\mathbf{M}\boldsymbol\varepsilon)' \mathbf{M} \boldsymbol\varepsilon/(n-K)\) and \(\mathbf{b}\) as \(\boldsymbol\beta + \mathbf{T}\boldsymbol\varepsilon\). Since \(\mathbf{T}\mathbf{M}=0\), \(\mathbf{T}\boldsymbol\varepsilon\) and \(\mathbf{M}\boldsymbol\varepsilon\) are independent (because two uncorrelated Gaussian variables are independent), therefore \(\mathbf{b}\) and \(s^2\), which are functions of two sets of independent variables, are independent.

Consistently with Eq. (1.8), under Hypotheses 1.1 to 1.5, the \(k^{th}\) entry of \(\mathbf{b}\) satisfies: \[ b_k | \mathbf{X} \sim \mathcal{N}(\beta_k,\sigma^2 v_k), \] where \(v_k\) is the k\(^{th}\) component of the diagonal of \((\mathbf{X}'\mathbf{X})^{-1}\).

Moreover, we have (Prop. 1.7): \[ \frac{(n-K)s^2}{\sigma^2} | \mathbf{X} \sim \chi ^2 (n-K). \]

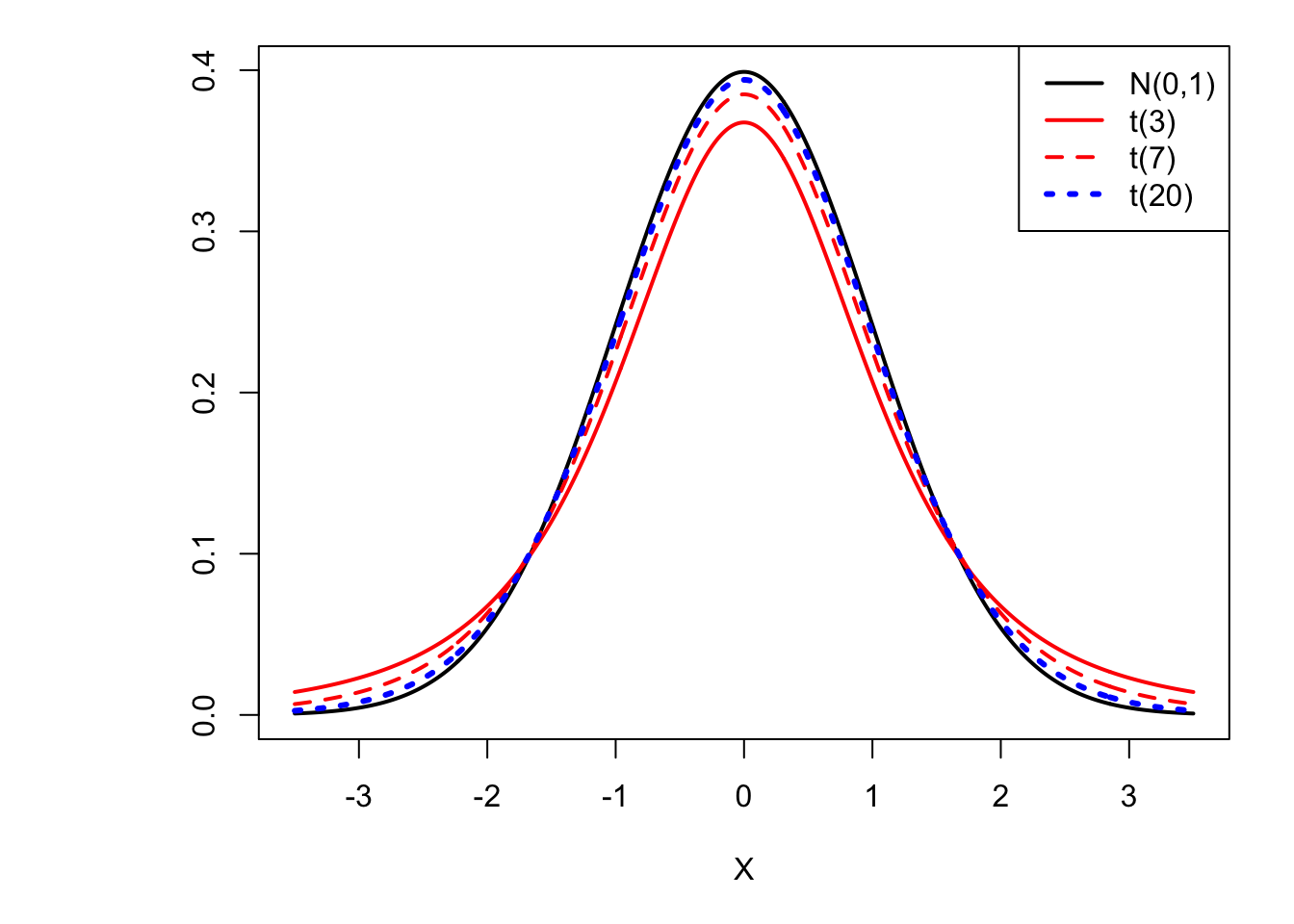

As a result (using Propositions 1.7 and 1.8), we have: \[\begin{equation} \boxed{t_k = \frac{\frac{b_k - \beta_k}{\sqrt{\sigma^2 v_k}}}{\sqrt{\frac{(n-K)s^2}{\sigma^2(n-K)}}} = \frac{b_k - \beta_k}{\sqrt{s^2v_k}} \sim t(n-K),}\tag{1.10} \end{equation}\] where \(t(n-K)\) denotes a Student \(t\) distribution with \(n-K\) degrees of freedom (see Def. 8.12).2

Note that \(s^2 v_k\) is not exactly the conditional variance of \(b_k\): The variance of \(b_k\) conditional on \(\mathbf{X}\) is \(\sigma^2 v_k\). However \(s^2 v_k\) is an unbiased estimate of \(\sigma^2 v_k\) (by Prop. 1.6).

The previous result (Eq. (1.10)) can be extended to any linear combinations of elements of \(\mathbf{b}\). (Eq. (1.10) is for its \(k^{th}\) component only.) Let us consider \(\boldsymbol\alpha'\mathbf{b}\), the OLS estimate of \(\boldsymbol\alpha'\boldsymbol\beta\). From Eq. (1.8), we have: \[ \boldsymbol\alpha'\mathbf{b} | \mathbf{X} \sim \mathcal{N}(\boldsymbol\alpha'\boldsymbol\beta,\sigma^2 \boldsymbol\alpha'(\mathbf{X}'\mathbf{X})^{-1}\boldsymbol\alpha). \] Therefore: \[ \frac{\boldsymbol\alpha'\mathbf{b} - \boldsymbol\alpha'\boldsymbol\beta}{\sqrt{\sigma^2 \boldsymbol\alpha'(\mathbf{X}'\mathbf{X})^{-1}\boldsymbol\alpha}} | \mathbf{X} \sim \mathcal{N}(0,1). \] Using the same approach as the one used to derive Eq. (1.10), one can show that Props. 1.7 and 1.8 also imply that: \[\begin{equation} \boxed{\frac{\boldsymbol\alpha'\mathbf{b} - \boldsymbol\alpha'\boldsymbol\beta}{\sqrt{s^2\boldsymbol\alpha'(\mathbf{X}'\mathbf{X})^{-1}\boldsymbol\alpha}} \sim t(n-K).}\tag{1.11} \end{equation}\]

Figure 1.4: The higher the degree of freedom, the closer the distribution of \(t(\nu)\) gets to the normal distribution. (Convergence in distribution.)

What precedes is widely exploited for statistical inference in the context of linear regressions. Indeed, Eq. (1.10) gives a sense of the distances between \(b_k\) and \(\beta_k\) that can be deemed as “likely” (or, conversely, “unlikely”). For instance, it implies that, if \(\sqrt{v_k s^2}\) is equal to 1 (say), then the probability to obtain \(b_k\) smaller than \(\beta_k-\) 4.587 \(\times \sqrt{v_k s^2}\) or larger than \(\beta_k+\) 4.587 \(\times \sqrt{v_k s^2}\) is equal to 0.1% when \(n-K=10\).

That means for instance that, under the assumption that \(\beta_k=0\), it would be extremely unlikely to have obtained \(b_k/\sqrt{v_k s^2}\) smaller than -4.587 or larger than 4.587. More generally, this shows that the t-statistic, i.e., the ratio \(b_k/\sqrt{v_k s^2}\), is the test statistic associated with the null hypothesis: \[ H_0: \beta_k=0. \] Under the null hypothesis, the test statistic follows a Student-t distribution with \(n-K\) degrees of freedom. The t-statistic is therefore of particular importance, and, as a result, it is routinely reported in regression outputs (see Example 1.2).

Example 1.2 (Education and income) Consider regression that aims at determining covariates of households’ income. This example makes use of data from the Swiss Household Panel (SHP); edyear19 is the number of years of education and age19 is the age of the respondent, as of 2019.

library(AEC)

library(sandwich)

shp$income <- shp$i19ptotn/1000

shp$female <- 1*(shp$sex19==2)

eq <- lm(income ~ edyear19 + age19 + I(age19^2) + female,data=shp)

lmtest::coeftest(eq)##

## t test of coefficients:

##

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -71.9738073 5.7082456 -12.609 < 2.2e-16 ***

## edyear19 4.8442661 0.2172320 22.300 < 2.2e-16 ***

## age19 3.2386215 0.2183812 14.830 < 2.2e-16 ***

## I(age19^2) -0.0289498 0.0020915 -13.842 < 2.2e-16 ***

## female -31.8089006 1.4578004 -21.820 < 2.2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The last two columns of the previous table give the t-statistic and the p-values associated with t-tests, whose size-\(\alpha\) critical region is: \[ \left]-\infty,-\Phi^{-1}_{t(n-K)}\left(1-\frac{\alpha}{2}\right)\right] \cup \left[\Phi^{-1}_{t(n-K)}\left(1-\frac{\alpha}{2}\right),+\infty\right[. \]

We recall that the p-value is defined as the probability that \(|Z| > |t|\), where \(t\) is the (computed) t-statistics and where \(Z \sim t(n-K)\). That is, in the context of the t-test, the p-value is given by \(2(1 - \Phi_{t(n-K)}(|t_k|))\). See this webpage for details regarding the link between critical regions, p-value, and test outcomes.

Now, suppose we want to compute a (symmetrical) confidence interval \([I_{d,1-\alpha},I_{u,1-\alpha}]\) that is such that \(\mathbb{P}(\beta_k \in [I_{d,1-\alpha},I_{u,1-\alpha}])=1-\alpha\). That is, we want to have: \(\mathbb{P}(\beta_k < I_{d,1-\alpha})=\frac{\alpha}{2}\) and \(\mathbb{P}(\beta_k > I_{u,1-\alpha})=\frac{\alpha}{2}\). Let us focus on \(I_{d,1-\alpha}\) to start with. Using Eq. (1.10), i.e., \(t_k = \frac{b_k - \beta_k}{\sqrt{s^2v_k}} \sim t(n-K)\), we have: \[\begin{eqnarray*} \mathbb{P}(\beta_k < I_{d,1-\alpha})=\frac{\alpha}{2} &\Leftrightarrow& \\ \mathbb{P}\left(\frac{b_k - \beta_k}{\sqrt{s^2v_k}} > \frac{b_k - I_{d,1-\alpha}}{\sqrt{s^2v_k}}\right)=\frac{\alpha}{2} &\Leftrightarrow& \mathbb{P}\left(t_k > \frac{b_k - I_{d,1-\alpha}}{\sqrt{s^2v_k}}\right)=\frac{\alpha}{2} \Leftrightarrow\\ 1 - \mathbb{P}\left(t_k \le \frac{b_k - I_{d,1-\alpha}}{\sqrt{s^2v_k}}\right)=\frac{\alpha}{2} &\Leftrightarrow& \frac{b_k - I_{d,1-\alpha}}{\sqrt{s^2v_k}} = \Phi^{-1}_{t(n-K)}\left(1-\frac{\alpha}{2}\right), \end{eqnarray*}\] where \(\Phi_{t(n-K)}(\alpha)\) is the c.d.f. of the \(t(n-K)\) distribution (Table 8.2).

Doing the same for \(I_{u,1-\alpha}\), we obtain: \[\begin{eqnarray*} &&[I_{d,1-\alpha},I_{u,1-\alpha}] =\\ &&\left[b_k - \Phi^{-1}_{t(n-K)}\left(1-\frac{\alpha}{2}\right)\sqrt{s^2v_k},b_k + \Phi^{-1}_{t(n-K)}\left(1-\frac{\alpha}{2}\right)\sqrt{s^2v_k}\right]. \end{eqnarray*}\]

Using the results presented in Example 1.2, we can compute lower and upper bounds of 95% confidence intervals for the estimated parameters as follows:

n <- length(eq$residuals); K <- length(eq$coefficients)

lower.b <- eq$coefficients + qt(.025,df=n-K)*sqrt(diag(vcov(eq)))

upper.b <- eq$coefficients + qt(.975,df=n-K)*sqrt(diag(vcov(eq)))

cbind(lower.b,upper.b)## lower.b upper.b

## (Intercept) -83.16413225 -60.78348237

## edyear19 4.41840914 5.27012310

## age19 2.81051152 3.66673148

## I(age19^2) -0.03304986 -0.02484977

## female -34.66674188 -28.951059321.2.5 Testing a set of linear restrictions

We sometimes want to test if a set of restrictions are jointly consistent with the data at hand. Let us formalize such a set of (\(J\)) linear restrictions: \[\begin{equation}\label{eq:restrictions} \begin{array}{ccc} r_{1,1} \beta_1 + \dots + r_{1,K} \beta_K &=& q_1\\ \vdots && \vdots\\ r_{J,1} \beta_1 + \dots + r_{J,K} \beta_K &=& q_J. \end{array} \end{equation}\] In matrix form, we get: \[\begin{equation} \mathbf{R}\boldsymbol\beta = \mathbf{q}. \end{equation}\]

Define the discrepancy vector \(\mathbf{m} = \mathbf{R}\mathbf{b} - \mathbf{q}\). Under the null hypothesis: \[\begin{eqnarray*} \mathbb{E}(\mathbf{m}|\mathbf{X}) &=& \mathbf{R}\boldsymbol\beta - \mathbf{q} = 0 \quad \mbox{and} \\ \mathbb{V}ar(\mathbf{m}|\mathbf{X}) &=& \mathbf{R} \mathbb{V}ar(\mathbf{b}|\mathbf{X}) \mathbf{R}'. \end{eqnarray*}\]

With these notations, the assumption to test is: \[\begin{equation} \boxed{H_0: \mathbf{R}\boldsymbol\beta - \mathbf{q} = 0 \mbox{ against } H_1: \mathbf{R}\boldsymbol\beta - \mathbf{q} \ne 0.}\tag{1.12} \end{equation}\]

Under Hypotheses 1.1 to 1.4, we have \(\mathbb{V}ar(\mathbf{m}|\mathbf{X}) = \sigma^2 \mathbf{R} (\mathbf{X}'\mathbf{X})^{-1} \mathbf{R}'\) (see Prop. 1.3). If we add the normality assumption (Hypothesis 1.5), we have: \[\begin{equation} W = \mathbf{m}'\mathbb{V}ar(\mathbf{m}|\mathbf{X})^{-1}\mathbf{m} \sim \chi^2(J). \tag{1.13} \end{equation}\]

If \(\sigma^2\) was known, we could then conduct a Wald test (directly exploiting Eq. (1.13)). But this is not the case in practice and we cannot compute \(W\). We can, however, approximate it be replacing \(\sigma^2\) by \(s^2\) (given in Eq. (1.9)). The distribution of this new statistic is not \(\chi^2(J)\) any more; it is an \(\mathcal{F}\) distribution (whose quantiles are shown in Table 8.4), and the test is called \(F\) test.

Proposition 1.9 Under Hypotheses 1.1 to 1.5 and if Eq. (1.12) holds, we have: \[\begin{equation} F = \frac{W}{J}\frac{\sigma^2}{s^2} = \frac{\mathbf{m}'(\mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{R}')^{-1}\mathbf{m}}{s^2J} \sim \mathcal{F}(J,n-K),\tag{1.14} \end{equation}\] where \(\mathcal{F}\) is the distribution of the F-statistic (see Def. 8.11).

Proof. According to Eq. (1.13), \(W/J \sim \chi^2(J)/J\). Moreover, the denominator (\(s^2/\sigma^2\)) is \(\sim \chi^2(n-K)\). Therefore, \(F\) is the ratio of a r.v. distributed as \(\chi^2(J)/J\) and another distributed as \(\chi^2(n-K)/(n-K)\). It remains to verify that these r.v. are independent. Under \(H_0\), we have \(\mathbf{m} = \mathbf{R}(\mathbf{b}-\boldsymbol\beta) = \mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\boldsymbol\varepsilon\). Therefore \(\mathbf{m}'(\mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{R}')^{-1}\mathbf{m}\) is of the form \(\boldsymbol\varepsilon'\mathbf{T}\boldsymbol\varepsilon\) with \(\mathbf{T}=\mathbf{D}'\mathbf{C}\mathbf{D}\) where \(\mathbf{D}=\mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\) and \(\mathbf{C}=(\mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{R}')^{-1}\). Under Hypotheses 1.1 to 1.4, the covariance between \(\mathbf{T}\boldsymbol\varepsilon\) and \(\mathbf{M}\boldsymbol\varepsilon\) is \(\sigma^2\mathbf{T}\mathbf{M} = \mathbf{0}\). Therefore, under 1.5, these variables are Gaussian variables with 0 covariance. Hence they are independent.

For large \(n-K\), the \(\mathcal{F}_{J,n-K}\) distribution converges to \(\mathcal{F}_{J,\infty}=\chi^2(J)/J\). This implies that, in large samples, the F-statistic approximately has a \(\chi^2\) distribution. In other words, one can approximately employ Eq. (1.13) to perform a Wald test (one just has to replace \(\sigma^2\) with \(s^2\) when computing \(\mathbb{V}ar(\mathbf{m}|\mathbf{X})\)).

The following proposition proposes another equivalent computation of the F-statistic, based on the \(R^2\) of the restricted and unrestricted linear models.

Proposition 1.10 The F-statistic defined by Eq. (1.14) is also equal to: \[\begin{equation} F = \frac{(R^2-R_*^2)/J}{(1-R^2)/(n-K)} = \frac{(SSR_{restr}-SSR_{unrestr})/J}{SSR_{unrestr}/(n-K)},\tag{1.15} \end{equation}\] where \(R_*^2\) is the coef. of determination (Eq. (1.5)) of the “restricted regression” (SSR: sum of squared residuals.)

Proof. Let’s denote by \(\mathbf{e}_*=\mathbf{y}-\mathbf{X}\mathbf{b}_*\) the vector of residuals associated to the restricted regression (i.e. \(\mathbf{R}\mathbf{b}_*=\mathbf{q}\)). We have \(\mathbf{e}_*=\mathbf{e} - \mathbf{X}(\mathbf{b}_*-\mathbf{b})\). Using \(\mathbf{e}'\mathbf{X}=0\), we get \(\mathbf{e}_*'\mathbf{e}_*=\mathbf{e}'\mathbf{e} + (\mathbf{b}_*-\mathbf{b})'\mathbf{X}'\mathbf{X}(\mathbf{b}_*-\mathbf{b}) \ge \mathbf{e}'\mathbf{e}\).

By Proposition 8.5 (in Appendix 8.1), we have: \(\mathbf{b}_*-\mathbf{b}=-(\mathbf{X}'\mathbf{X})^{-1} \mathbf{R}'\{\mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{R}'\}^{-1}(\mathbf{R}\mathbf{b} - \mathbf{q})\). Therefore: \[ \mathbf{e}_*'\mathbf{e}_* - \mathbf{e}'\mathbf{e} = (\mathbf{R}\mathbf{b} - \mathbf{q})'[\mathbf{R}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{R}']^{-1}(\mathbf{R}\mathbf{b} - \mathbf{q}). \] This implies that the F statistic defined in Prop. 1.9 is also equal to: \[ \frac{(\mathbf{e}_*'\mathbf{e}_* - \mathbf{e}'\mathbf{e})/J}{\mathbf{e}'\mathbf{e}/(n-K)}, \] which leads to the result.

The null hypothesis \(H_0\) (Eq. (1.12)) of the F-test is rejected if \(F\) —defined by Eq. (1.14) or (1.15)— is higher than \(\mathcal{F}_{1-\alpha}(J,n-K)\). (Hence, this test is a one-sided test.)

1.2.6 Large Sample Properties

Even if we relax the normality assumption (Hypothesis 1.5), we can approximate the finite-sample behavior of the estimators by using large-sample or asymptotic properties.

To begin with, we proceed under Hypothesis 1.1 to 1.4. (We will see, later on, how to deal with —partial— relaxations of Hypothesis 1.3 and 1.4.)

Under regularity assumptions, and under Hypotheses 1.1 to 1.4, even if the residuals are not normally-distributed, the least square estimators can be asymptotically normal and inference can be performed in the same way as in small samples when Hypotheses 1.1 to 1.5 hold. This derives from Prop. 1.11 (below). The F-test (Prop. 1.10) and the t-test (Eq. (1.10)) can then be performed.

Proposition 1.11 Under Assumptions 1.1 to 1.4, and assuming further that: \[\begin{equation} Q = \mbox{plim}_{n \rightarrow \infty} \frac{\mathbf{X}'\mathbf{X}}{n},\tag{1.16} \end{equation}\] and that the \((\mathbf{x}_i,\varepsilon_i)\)’s are independent (across entities \(i\)), we have: \[\begin{equation} \sqrt{n}(\mathbf{b} - \boldsymbol\beta)\overset{d} {\rightarrow} \mathcal{N}\left(0,\sigma^2Q^{-1}\right).\tag{1.17} \end{equation}\]

Proof. Since \(\mathbf{b} = \boldsymbol\beta + \left( \frac{\mathbf{X}'\mathbf{X}}{n}\right)^{-1}\left(\frac{\mathbf{X}'\boldsymbol\varepsilon}{n}\right)\), we have: \(\sqrt{n}(\mathbf{b} - \boldsymbol\beta) = \left( \frac{\mathbf{X}'\mathbf{X}}{n}\right)^{-1} \left(\frac{1}{\sqrt{n}}\right)\mathbf{X}'\boldsymbol\varepsilon\). Since \(f:A \rightarrow A^{-1}\) is a continuous function (for \(A \ne \mathbf{0}\)), \(\mbox{plim}_{n \rightarrow \infty} \left(\frac{\mathbf{X}'\mathbf{X}}{n}\right)^{-1} = \mathbf{Q}^{-1}\) (see Prop. 8.12). Let us denote by \(V_i\) the vector \(\mathbf{x}_i \varepsilon_i\). Because the \((\mathbf{x}_i,\varepsilon_i)\)’s are independent, the \(V_i\)’s are independent as well. Their covariance matrix is \(\sigma^2\mathbb{E}(\mathbf{x}_i \mathbf{x}_i')=\sigma^2Q\). Applying the multivariate central limit theorem on vectors \(V_i\) gives \(\sqrt{n}\left(\frac{1}{n}\sum_{i=1}^n \mathbf{x}_i \varepsilon_i\right) = \left(\frac{1}{\sqrt{n}}\right)\mathbf{X}'\boldsymbol\varepsilon \overset{d}{\rightarrow} \mathcal{N}(0,\sigma^2Q)\). An application of Slutsky’s theorem (Prop. 8.12) then leads to the results.

In practice, \(\sigma^2\) is approximated by \(s^2=\frac{\mathbf{e}'\mathbf{e}}{n-K}\) (Eq. (1.9)) and \(\mathbf{Q}^{-1}\) by \(\left(\frac{\mathbf{X}'\mathbf{X}}{n}\right)^{-1}\). That is, the covariance matrix of the estimator is approximated by: \[\begin{equation} \boxed{\widehat{\mathbb{V}ar}(\mathbf{b}) = s^2 (\mathbf{X}'\mathbf{X})^{-1}.}\tag{1.18} \end{equation}\]

Eqs. (1.16) and (1.17) respectively correspond to convergences in probability and in distribution (see Definitions 8.16 and 8.19, respectively).

1.3 Common pitfalls in linear regressions

1.3.1 Multicollinearity

Consider the model: \(y_i = \beta_1 x_{i,1} + \beta_2 x_{i,2} + \varepsilon_i\), where all variables are zero-mean and \(\mathbb{V}ar(\varepsilon_i)=\sigma^2\). We have: \[ \mathbf{X}'\mathbf{X} = \left[ \begin{array}{cc} \sum_i x_{i,1}^2 & \sum_i x_{i,1} x_{i,2} \\ \sum_i x_{i,1} x_{i,2} & \sum_i x_{i,2}^2 \end{array}\right], \] therefore: \[\begin{eqnarray*} (\mathbf{X}'\mathbf{X})^{-1} &=& \frac{1}{\sum_i x_{i,1}^2\sum_i x_{i,2}^2 - (\sum_i x_{i,1} x_{i,2})^2} \left[ \begin{array}{cc} \sum_i x_{i,2}^2 & -\sum_i x_{i,1} x_{i,2} \\ -\sum_i x_{i,1} x_{i,2} & \sum_i x_{i,1}^2 \end{array}\right]. \end{eqnarray*}\] The inverse of the upper-left parameter of \((\mathbf{X}'\mathbf{X})^{-1}\) is: \[\begin{equation} \sum_i x_{i,1}^2 - \frac{(\sum_i x_{i,1} x_{i,2})^2}{\sum_i x_{i,2}^2} = \sum_i x_{i,1}^2(1 - correl_{1,2}^2),\tag{1.19} \end{equation}\] where \(correl_{1,2}\) is the sample correlation between \(\mathbf{x}_{1}\) and \(\mathbf{x}_{2}\).

Hence, the closer to one \(correl_{1,2}\), the higher the variance of \(b_1\) (recall that the variance of \(b_1\) is the upper-left component of \(\sigma^2(\mathbf{X}'\mathbf{X})^{-1}\)). That is, if some of our regressors are close to a linear conbination of the other ones, then the confidence intervals will tend to be wide, which typically reduces the power of the t-test (we tend to fail to reject the null hypothesis that the coefficients are different from zero).

1.3.2 Omitted variables

Consider the following model (the “True model”): \[ \mathbf{y} = \underbrace{\mathbf{X}_1}_{n \times K_1}\underbrace{\boldsymbol\beta_1}_{K_1 \times 1} + \underbrace{\mathbf{X}_2}_{n\times K_2}\underbrace{\boldsymbol\beta_2}_{K_2 \times 1} + \boldsymbol\varepsilon \] If one computes \(\mathbf{b}_1\) by regressing \(\mathbf{y}\) on \(\mathbf{X}_1\) only, one gets: \[ \mathbf{b}_1 = (\mathbf{X}_1'\mathbf{X}_1)^{-1}\mathbf{X}_1'\mathbf{y} = \boldsymbol\beta_1 + (\mathbf{X}_1'\mathbf{X}_1)^{-1}\mathbf{X}_1'\mathbf{X}_2\boldsymbol\beta_2 + (\mathbf{X}_1'\mathbf{X}_1)^{-1}\mathbf{X}_1'\boldsymbol\varepsilon. \]

This results in the omitted-variable formula: \[ \mathbb{E}(\mathbf{b}_1|\mathbf{X}) = \boldsymbol\beta_1 + \underbrace{(\mathbf{X}_1'\mathbf{X}_1)^{-1}(\mathbf{X}_1'\mathbf{X}_2)}_{K_1 \times K_2}\boldsymbol\beta_2. \] (Each column of \((\mathbf{X}_1'\mathbf{X}_1)^{-1}(\mathbf{X}_1'\mathbf{X}_2)\) are the OLS regressors obtained when regressing the columns of \(\mathbf{X}_2\) on \(\mathbf{X}_1\).) Unless the variables included in \(\mathbf{X}_1\) are orthogonal to those in \(\mathbf{X}_2\), we obtain a bias. A way to address this potential pitfall is to introduce “controls” in the specification.

Example 1.3 Let us use the California Test Score dataset (in the package AER). Assume we want to measure the effect of the students-to-teacher ratio (str) on student test scores (testscr). The following regressions show that the effect is lower when controls are added.

library(AER); data("CASchools")

CASchools$str <- CASchools$students/CASchools$teachers

CASchools$testscr <- .5 * (CASchools$math + CASchools$read)

eq1 <- lm(testscr~str,data=CASchools)

eq2 <- lm(testscr~str+lunch,data=CASchools)

eq3 <- lm(testscr~str+lunch+english,data=CASchools)

stargazer::stargazer(eq1,eq2,eq3,type="text",

no.space = TRUE,omit.stat=c("f","ser"))##

## =============================================

## Dependent variable:

## --------------------------------

## testscr

## (1) (2) (3)

## ---------------------------------------------

## str -2.280*** -1.117*** -0.998***

## (0.480) (0.240) (0.239)

## lunch -0.600*** -0.547***

## (0.017) (0.022)

## english -0.122***

## (0.032)

## Constant 698.933*** 702.911*** 700.150***

## (9.467) (4.700) (4.686)

## ---------------------------------------------

## Observations 420 420 420

## R2 0.051 0.767 0.775

## Adjusted R2 0.049 0.766 0.773

## =============================================

## Note: *p<0.1; **p<0.05; ***p<0.011.3.3 Irrelevant variable

Consider the true model: \[ \mathbf{y} = \mathbf{X}_1\boldsymbol\beta_1 + \boldsymbol\varepsilon, \] while the estimated model is: \[ \mathbf{y} = \mathbf{X}_1\boldsymbol\beta_1 + \mathbf{X}_2\boldsymbol\beta_2 + \boldsymbol\varepsilon \]

The estimates are unbiased. However, adding irrelevant explanatory variables increases the variance of the estimate of \(\boldsymbol\beta_1\) (compared to the case where one uses the correct explanatory variables). This is the case unless the correlation between \(\mathbf{X}_1\) and \(\mathbf{X}_2\) is null, see Eq. (1.19).

In other words, the estimator is inefficient, i.e., there exists an alternative consistent estimator whose variance is lower. The inefficiency problem can have serious consequences when testing hypotheses such as \(H_0: \beta_1 = 0\). Due to the loss of power, we might wrongly infer that the \(\mathbf{X}_1\) variables are not “relevant” (Type-II error, False Negative).

1.4 Instrumental Variables

The conditional mean zero assumption (Hypothesis 1.2), according to which \(\mathbb{E}(\boldsymbol\varepsilon|\mathbf{X})=0\) —which implies in particular that \(\mathbf{x}_i\) and \(\varepsilon_i\) are uncorrelated— is sometimes not consistent with the considered economic framework. When it is the case, the parameters of interest may still be estimated consistently by resorting to instrumental variable techniques.

Consider the following model: \[\begin{equation} y_i = \mathbf{x_i}'\boldsymbol\beta + \varepsilon_i, \quad \mbox{where } \mathbb{E}(\varepsilon_i)=0 \mbox{ and } \mathbf{x_i}\not\perp \varepsilon_i.\tag{1.20} \end{equation}\]

Let us illustrate how this situation may result in biased OLS estimate. Consider for instance the situation where: \[\begin{equation} \mathbb{E}(\varepsilon_i)=0 \quad \mbox{and} \quad \mathbb{E}(\varepsilon_i \mathbf{x_i})=\boldsymbol\gamma,\tag{1.21} \end{equation}\] in which case we have \(\mathbf{x}_i\not\perp \varepsilon_i\) (consistently with Eq. (1.20)).

By the law of large numbers, \(\mbox{plim}_{n \rightarrow \infty} \mathbf{X}'\boldsymbol\varepsilon / n = \boldsymbol\gamma\). If \(\mathbf{Q}_{xx} := \mbox{plim } \mathbf{X}'\mathbf{X}/n\), the OLS estimator is not consistent because \[ \mathbf{b} = \boldsymbol\beta + (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\boldsymbol\varepsilon \overset{p}{\rightarrow} \boldsymbol\beta + \mathbf{Q}_{xx}^{-1}\boldsymbol\gamma \ne \boldsymbol\beta. \]

Let us now introduce the notion of instruments.

Definition 1.2 (Instrumental variables) The \(L\)-dimensional random variable \(\mathbf{z}_i\) is a valid set of instruments if:

- \(\mathbf{z}_i\) is correlated to \(\mathbf{x}_i\);

- we have \(\mathbb{E}(\boldsymbol\varepsilon|\mathbf{Z})=0\) and

- the orthogonal projections of the \(\mathbf{x}_i\)’s on the \(\mathbf{z}_i\)’s are not multicollinear.

Point c implies in particular that the dimension of \(\mathbf{z}_i\) has to be at least as large as that of \(\mathbf{x}_i\). If \(\mathbf{z}_i\) is a valid set of instruments, we have: \[ \mbox{plim}\left( \frac{\mathbf{Z}'\mathbf{y}}{n} \right) =\mbox{plim}\left( \frac{\mathbf{Z}'(\mathbf{X}\boldsymbol\beta + \boldsymbol\varepsilon)}{n} \right) = \mbox{plim}\left( \frac{\mathbf{Z}'\mathbf{X}}{n} \right)\boldsymbol\beta. \] Indeed, by the law of large numbers, \(\frac{\mathbf{Z}'\boldsymbol\varepsilon}{n} \overset{p}{\rightarrow}\mathbb{E}(\mathbf{z}_i\varepsilon_i)=0\).

If \(L = K\), the matrix \(\frac{\mathbf{Z}'\mathbf{X}}{n}\) is of dimension \(K \times K\) and we have: \[ \left[\mbox{plim }\left( \frac{\mathbf{Z}'\mathbf{X}}{n} \right)\right]^{-1}\mbox{plim }\left( \frac{\mathbf{Z}'\mathbf{y}}{n} \right) = \boldsymbol\beta. \] By continuity of the inverse function (everywhere but at 0): \(\left[\mbox{plim }\left( \frac{\mathbf{Z}'\mathbf{X}}{n} \right)\right]^{-1}=\mbox{plim }\left( \frac{\mathbf{Z}'\mathbf{X}}{n} \right)^{-1}\). The Slutsky Theorem (Prop. 8.12) further implies that: \[ \mbox{plim }\left( \frac{\mathbf{Z}'\mathbf{X}}{n} \right)^{-1} \mbox{plim }\left( \frac{\mathbf{Z}'\mathbf{y}}{n} \right) = \mbox{plim }\left( \left( \frac{\mathbf{Z}'\mathbf{X}}{n} \right)^{-1} \frac{\mathbf{Z}'\mathbf{y}}{n} \right). \] Hence \(\mathbf{b}_{iv}\) is consistent if it is defined by: \[ \boxed{\mathbf{b}_{iv} = (\mathbf{Z}'\mathbf{X})^{-1}\mathbf{Z}'\mathbf{y}.} \]

Proposition 1.12 (Asymptotic distribution of the IV estimator) If \(\mathbf{z}_i\) is a \(L\)-dimensional random variable that constitutes a valid set of instruments (see Def. 1.2) and if \(L=K\), then the asymptotic distribution of \(\mathbf{b}_{iv}\) is: \[ \mathbf{b}_{iv} \overset{d}{\rightarrow} \mathcal{N}\left(\boldsymbol\beta,\frac{\sigma^2}{n}\left[Q_{xz}Q_{zz}^{-1}Q_{zx}\right]^{-1}\right) \] where \(\mbox{plim } \mathbf{Z}'\mathbf{Z}/n =: \mathbf{Q}_{zz}\), \(\mbox{plim } \mathbf{Z}'\mathbf{X}/n =: \mathbf{Q}_{zx}\), \(\mbox{plim } \mathbf{X}'\mathbf{Z}/n =: \mathbf{Q}_{xz}\).

Proof. The proof is very similar to that of Prop. 1.11, the starting point being that \(\mathbf{b}_{iv} = \boldsymbol\beta + (\mathbf{Z}'\mathbf{X})^{-1}\mathbf{Z}'\boldsymbol\varepsilon\).

When \(L=K\), we have: \[ \left[Q_{xz}Q_{zz}^{-1}Q_{zx}\right]^{-1}=Q_{zx}^{-1}Q_{zz}Q_{xz}^{-1}. \] In practice, to estimate \(\mathbb{V}ar(\mathbf{b}_{iv}) = \frac{\sigma^2}{n}Q_{zx}^{-1}Q_{zz}Q_{xz}^{-1}\), we replace \(\sigma^2\) by: \[ s_{iv}^2 = \frac{1}{n}\sum_{i=1}^{n} (y_i - \mathbf{x}_i'\mathbf{b}_{iv})^2. \]

What about when \(L > K\)? In this case, we proceed as follows:

- Regress \(\mathbf{X}\) on the space spanned by \(\mathbf{Z}\) and

- Regress \(\mathbf{y}\) on the fitted values \(\hat{\mathbf{X}}:=\mathbf{Z}(\mathbf{Z}'\mathbf{Z})^{-1}\mathbf{Z}'\mathbf{X}\).

These two-step approach is called Two-Stage Least Squares (2SLS). It results in: \[\begin{equation} \boxed{\mathbf{b}_{iv} = [\mathbf{X}'\mathbf{Z}(\mathbf{Z}'\mathbf{Z})^{-1}\mathbf{Z}'\mathbf{X}]^{-1}\mathbf{X}'\mathbf{Z}(\mathbf{Z}'\mathbf{Z})^{-1}\mathbf{Z}'\mathbf{Y}.} \tag{1.22} \end{equation}\]

In this case, Prop. 1.12 still holds, with \(\mathbf{b}_{iv}\) given by Eq. (1.22).

If the instruments do not properly satisfy Condition (a) in Def. 1.2 (i.e. if \(\mathbf{x}_i\) and \(\mathbf{z}_i\) are only loosely related), the instruments are said to be weak (see, e.g., J. H. Stock and Yogo (2005), available here or Andrews, Stock, and Sun (2019)). A simple standard way to test for weak instruments consist in looking at the F-statistic associated with the first stage of the estimation. The easier it is to reject the null hypothesis (large test statistic), the less weak —or the stronger— the instruments.

The Durbin-Wu-Hausman test (Durbin (1954), Wu (1973), Hausman (1978)) can be used to test if IV necessary. (IV techniques are required if \(\mbox{plim}_{n \rightarrow \infty} \mathbf{X}'\boldsymbol\varepsilon / n \ne 0\).) Hausman (1978) proposes a test of the efficiency of estimators. Under the null hypothesis two estimators, \(\mathbf{b}_0\) and \(\mathbf{b}_1\), are consistent but \(\mathbf{b}_0\) is (asymptotically) efficient relative to \(\mathbf{b}_1\). Under the alternative hypothesis, \(\mathbf{b}_1\) (IV in the present case) remains consistent but not \(\mathbf{b}_0\) (OLS in the present case). That is, when we reject the null hypothesis, it means that the OLS estimator is not consistent, potentially due to endogeneity issue.

The test statistic is: \[ H = (\mathbf{b}_1 - \mathbf{b}_0)' MPI(\mathbb{V}ar(\mathbf{b}_1) - \mathbb{V}ar(\mathbf{b}_0))(\mathbf{b}_1 - \mathbf{b}_0), \] where \(MPI\) is the Moore-Penrose pseudo-inverse. Under the null hypothesis, \(H \sim \chi^2(q)\), where \(q\) is the rank of \(\mathbb{V}ar(\mathbf{b}_1) - \mathbb{V}ar(\mathbf{b}_0)\).

Example 1.4 (Estimation of price elasticity) See e.g. WHO and estimation of tobacco price elasticity of demand.

We want to estimate what is the effect on demand of an exogenous increase in prices of cigarettes (say).

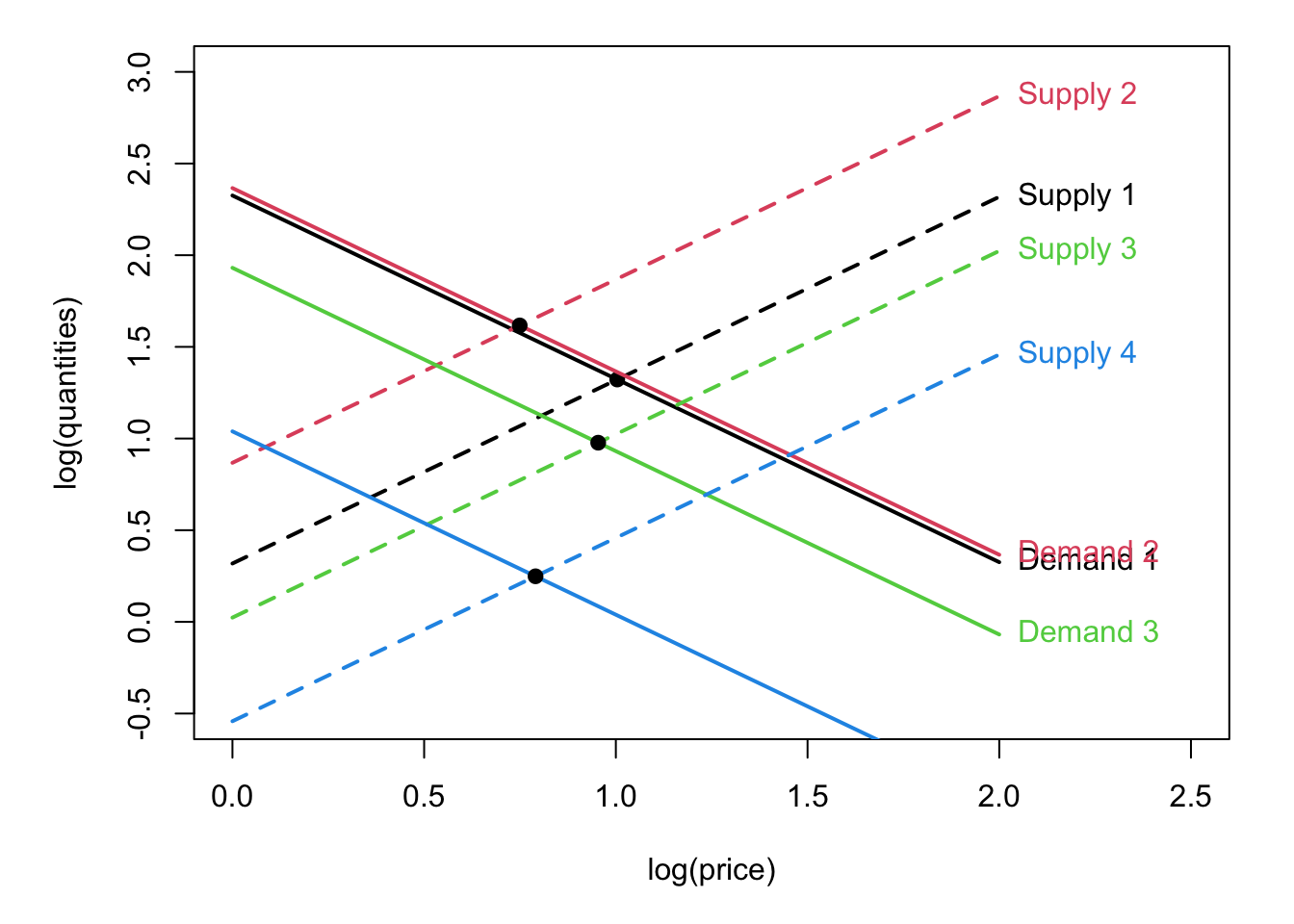

The model is: \[\begin{eqnarray*} \underbrace{q^d_t}_{\mbox{log(demand)}} &=& \alpha_0 + \alpha_1 \underbrace{\times p_t}_{\mbox{log(price)}} + \alpha_2 \underbrace{\times w_t}_{\mbox{income}} + \varepsilon_t^d\\ \underbrace{q^s_t}_{\mbox{log(supply)}} &=& \gamma_0 + \gamma_1 \times p_t + \gamma_2 \underbrace{\times \mathbf{y}_t}_{\mbox{cost factors}} + \varepsilon_t^s, \end{eqnarray*}\] where \(\mathbf{y}_t\), \(w_t\), \(\varepsilon_t^s \sim \mathcal{N}(0,\sigma^2_s)\) and \(\varepsilon_t^d \sim \mathcal{N}(0,\sigma^2_d)\) are independent.

Equilibrium: \(q^d_t = q^s_t\). This implies that prices are endogenous: \[ p_t = \frac{\alpha_0 + \alpha_2 w_t + \varepsilon_t^d - \gamma_0 - \gamma_2 \mathbf{y}_t - \varepsilon_t^s}{\gamma_1 - \alpha_1}. \] In particular we have \(\mathbb{E}(p_t \varepsilon_t^d) = \frac{\sigma^2_d}{\gamma_1 - \alpha_1} \ne 0\) \(\Rightarrow\) Regressing by OLS \(q_t^d\) on \(p_t\) gives biased estimates (see Eq. (1.21)).

Figure 1.5: This figure illustrates the situation prevailing when estimating a price-elasticity (and the price is endogenous).

Let us use IV regressions to estimate the price elasticity of cigarette demand. For that purpose, we use the CigarettesSW dataset of package AER (these data are used by J. Stock and Watson (2003)). This panel dataset documents cigarette consumption for the 48 continental US States from 1985–1995. The instrument is the real tax on cigarettes arising from the state’s general sales tax. The rationale is that larger general sales tax drives cigarette prices up, but the general tax is not determined by other forces affecting \(\varepsilon_t^d\).

data("CigarettesSW", package = "AER")

CigarettesSW$rprice <- with(CigarettesSW, price/cpi)

CigarettesSW$rincome <- with(CigarettesSW, income/population/cpi)

CigarettesSW$tdiff <- with(CigarettesSW, (taxs - tax)/cpi)

## model

eq.IV1 <- ivreg(log(packs) ~ log(rprice) + log(rincome) |

log(rincome) + tdiff + I(tax/cpi),

data = CigarettesSW, subset = year == "1995")

eq.IV2 <- ivreg(log(packs) ~ log(rprice) | tdiff,

data = CigarettesSW, subset = year == "1995")

eq.no.IV <- lm(log(packs) ~ log(rprice) + log(rincome),

data = CigarettesSW, subset = year == "1995")

stargazer::stargazer(eq.no.IV,eq.IV1,eq.IV2,type="text",no.space = TRUE,

omit.stat=c("f","ser"))##

## ==========================================

## Dependent variable:

## -----------------------------

## log(packs)

## OLS instrumental

## variable

## (1) (2) (3)

## ------------------------------------------

## log(rprice) -1.407*** -1.277*** -1.084***

## (0.251) (0.263) (0.317)

## log(rincome) 0.344 0.280

## (0.235) (0.239)

## Constant 10.342*** 9.895*** 9.720***

## (1.023) (1.059) (1.514)

## ------------------------------------------

## Observations 48 48 48

## R2 0.433 0.429 0.401

## Adjusted R2 0.408 0.404 0.388

## ==========================================

## Note: *p<0.1; **p<0.05; ***p<0.01

summary(eq.IV1,diagnostics = TRUE)$diagnostics## df1 df2 statistic p-value

## Weak instruments 2 44 244.7337536 1.444054e-24

## Wu-Hausman 1 44 3.0678163 8.682505e-02

## Sargan 1 NA 0.3326221 5.641191e-01The last three tests are interpreted as follows:

- Since the p-value of the first test is small, we reject the null hypothesis according to which the instrument is weak.

- The small p-value of the Wu-Hausman test implies that we reject the null hypothesis according to which the OLS estimates are consistent (at the 10% level only, though).

- No over-identification (misspecification) is detected by the Sargan test (large p-value).

Example 1.5 (Education and wage) In this example, we make use of another dataset proposed by J. Stock and Watson (2003), namely the CollegeDistance dataset.3 the objective is to estimate the effect of education on wages. Education choice is suspected to be an endogenous variable, which calls for an IV strategy. The instrumental variable is the distance to college (see, e.g., Dee (2004)).

library(sem)

data("CollegeDistance", package = "AER")

eq.1st.stage <- lm(education ~ urban + gender + ethnicity + unemp + distance,

data = CollegeDistance)

CollegeDistance$ed.pred<- predict(eq.1st.stage)

eq.2nd.stage <- lm(wage ~ urban + gender + ethnicity + unemp + ed.pred,

data = CollegeDistance)

eqOLS <- lm(wage ~ urban + gender + ethnicity + unemp + education,

data=CollegeDistance)

eq2SLS <- ivreg(wage ~ urban + gender + ethnicity + unemp + education|

urban + gender + ethnicity + unemp + distance,

data=CollegeDistance)

stargazer::stargazer(eq.1st.stage,eq.2nd.stage,eq2SLS,eqOLS,

type="text",no.space = TRUE,omit.stat = c("f","ser"))##

## ============================================================

## Dependent variable:

## ------------------------------------------

## education wage

## OLS OLS instrumental OLS

## variable

## (1) (2) (3) (4)

## ------------------------------------------------------------

## urbanyes -0.092 0.046 0.046 0.070

## (0.065) (0.045) (0.060) (0.045)

## genderfemale -0.025 -0.071* -0.071 -0.085**

## (0.052) (0.037) (0.050) (0.037)

## ethnicityafam -0.524*** -0.227*** -0.227** -0.556***

## (0.072) (0.073) (0.099) (0.052)

## ethnicityhispanic -0.275*** -0.351*** -0.351*** -0.544***

## (0.068) (0.057) (0.077) (0.049)

## unemp 0.010 0.139*** 0.139*** 0.133***

## (0.010) (0.007) (0.009) (0.007)

## distance -0.087***

## (0.012)

## ed.pred 0.647***

## (0.101)

## education 0.647*** 0.005

## (0.136) (0.010)

## Constant 14.061*** -0.359 -0.359 8.641***

## (0.083) (1.412) (1.908) (0.157)

## ------------------------------------------------------------

## Observations 4,739 4,739 4,739 4,739

## R2 0.023 0.117 -0.612 0.110

## Adjusted R2 0.022 0.116 -0.614 0.109

## ============================================================

## Note: *p<0.1; **p<0.05; ***p<0.011.5 General Regression Model (GRM) and robust covariance matrices

The statistical inference presented above relies on strong assumptions regarding the stochastic properties of the errors. Namely, they are assumed to be mutually uncorrelated (Hypothesis 1.4) and homoskedastic (Hypothesis 1.3).

The objective of this section is to present approaches aimed at adjusting the estimate of the covariance matrix of the OLS estimator (\((\mathbf{X}'\mathbf{X})^{-1}s^2\), see Eq. (1.18)), when the previous hypotheses do not hold.

1.5.1 Presentation of the General Regression Model (GRM)

It will prove useful to introduce the following notation: \[\begin{eqnarray} \mathbb{V}ar(\boldsymbol\varepsilon | \mathbf{X}) = \mathbb{E}(\boldsymbol\varepsilon \boldsymbol\varepsilon'| \mathbf{X}) &=& \boldsymbol\Sigma. \tag{1.23} \end{eqnarray}\]

Note that Eq. (1.23) is more general than Hypothesis 1.3 and 1.4 because the diagonal entries of \(\boldsymbol\Sigma\) may be different (as opposed to under Hypothesis 1.3), and the non-diagonal entries of \(\boldsymbol\Sigma\) can be non-null (as opposed to under Hypothesis 1.4).

Definition 1.3 (General Regression Model (GRM)) Hypothesis 1.1 and 1.2, together with Eq. (1.23), form the General Regression Model (GRM) framework.

Naturally, a regression model where Hypotheses 1.1 to 1.4 hold is a specific case of the GRM framework.

The GRM context notably encompasses situations of heteroskedasticity and autocorrelation:

Heteroskedasticity: \[\begin{equation} \boldsymbol\Sigma = \left[ \begin{array}{cccc} \sigma_1^2 & 0 & \dots & 0 \\ 0 & \sigma_2^2 & & 0 \\ \vdots && \ddots& \vdots \\ 0 & \dots & 0 & \sigma_n^2 \end{array} \right]. \tag{1.24} \end{equation}\]

Autocorrelation: \[\begin{equation} \boldsymbol\Sigma = \sigma^2 \left[ \begin{array}{cccc} 1 & \rho_{2,1} & \dots & \rho_{n,1} \\ \rho_{2,1} & 1 & & \vdots \\ \vdots && \ddots& \rho_{n,n-1} \\ \rho_{n,1} & \rho_{n,2} & \dots & 1 \end{array} \right]. \tag{1.25} \end{equation}\]

Example 1.6 (Auto-regressive processes) Autocorrelation is common in time-series contexts (see Section ??). In a time-series context, subscript \(i\) refers to a date.

Assume for instance that: \[\begin{equation} y_i = \mathbf{x}_i' \boldsymbol\beta + \varepsilon_i \tag{1.26} \end{equation}\] with \[\begin{equation} \varepsilon_i = \rho \varepsilon_{i-1} + v_i, \quad v_i \sim \mathcal{N}(0,\sigma_v^2).\tag{1.27} \end{equation}\] In this case, we are in the GRM context, with: \[\begin{equation} \boldsymbol\Sigma =\frac{ \sigma_v^2}{1 - \rho^2} \left[ \begin{array}{cccc} 1 & \rho & \dots & \rho^{n-1} \\ \rho & 1 & & \vdots \\ \vdots && \ddots& \rho \\ \rho^{n-1} & \rho^{n-2} & \dots & 1 \end{array} \right].\tag{1.28} \end{equation}\]

In some cases —in particular when one assumes a parametric formulation for \(\boldsymbol\Sigma\)— one can determine a better (more accurate) estimator than the OLS one. This approach is called Generalized Least Squares (GLS), which we present below.

1.5.2 Generalized Least Squares

Assume \(\boldsymbol\Sigma\) is known (“feasible GLS”). Because \(\boldsymbol\Sigma\) is symmetric positive, it admits a spectral decomposition of the form \(\boldsymbol\Sigma = \mathbf{C} \boldsymbol\Lambda \mathbf{C}'\), where \(\mathbf{C}\) is an orthogonal matrix (i.e. \(\mathbf{C}\mathbf{C}'=Id\)) and \(\boldsymbol\Lambda\) is a diagonal matrix (the diagonal entries are the eigenvalues of \(\boldsymbol\Sigma\)).

We have \(\boldsymbol\Sigma = (\mathbf{P}\mathbf{P}')^{-1}\) with \(\mathbf{P} = \mathbf{C}\boldsymbol\Lambda^{-1/2}\). (We also have \(\mathbf{P}=\boldsymbol\Sigma^{-1/2}\).) Consider the transformed model: \[ \mathbf{P}'\mathbf{y} = \mathbf{P}'\mathbf{X}\boldsymbol\beta + \mathbf{P}'\boldsymbol\varepsilon \quad \mbox{or} \quad \mathbf{y}^* = \mathbf{X}^*\boldsymbol\beta + \boldsymbol\varepsilon^*. \] The variance of \(\boldsymbol\varepsilon^*\) is the identity matrix \(Id\). In the transformed model, OLS is BLUE (Gauss-Markow Theorem 1.1).

The Generalized least squares estimator of \(\boldsymbol\beta\) is: \[\begin{equation} \boxed{\mathbf{b}_{GLS} = (\mathbf{X}'\boldsymbol\Sigma^{-1}\mathbf{X})^{-1}\mathbf{X}'\boldsymbol\Sigma^{-1}\mathbf{y}.}\tag{1.29} \end{equation}\] We have: \[ \mathbb{V}ar(\mathbf{b}_{GLS}|\mathbf{X}) = (\mathbf{X}'\boldsymbol\Sigma^{-1}\mathbf{X})^{-1}. \]

However, in general, \(\boldsymbol\Sigma\) is unknown. The GLS estimator is then said to be infeasible. Some structure is required. Assume \(\boldsymbol\Sigma\) admits a parametric form \(\boldsymbol\Sigma(\theta)\). The estimation becomes feasible (FGLS) if one replaces \(\boldsymbol\Sigma(\theta)\) by \(\boldsymbol\Sigma(\hat\theta)\), where \(\hat\theta\) is a consistent estimator of \(\theta\). In that case, the FGLS is asymptotically efficient (see Example 1.7).

When \(\boldsymbol\Sigma\) has no obvious structure: the OLS (or IV) is the only estimator available. Under regularity assumptions, it remains unbiased, consistent, and asymptotically normally distributed, but not efficient. Standard inference procedures are no longer appropriate.

Example 1.7 (GLS in the auto-correlation case) Consider the case presented in Example 1.6. Because the OLS estimate \(\mathbf{b}\) of \(\boldsymbol\beta\) is consistent, the estimates \(e_i\) of the \(\varepsilon_i\)’s also are. Consistent estimators of \(\rho\) and \(\sigma_v\) are then obtained by regressing the \(e_i\)’s on the \(e_{i-1}\)’s. Using these estimates in Eq. (1.28) provides a consistent estimate of \(\boldsymbol\Sigma\). Applying these steps recursively gives an efficient estimator of \(\boldsymbol\beta\) (Cochrane and Orcutt (1949)).

1.5.3 Asymptotic properties of the OLS estimator in the GRM framework

Since \(\mathbf{b} = \boldsymbol\beta + \left(\mathbf{X}'\mathbf{X}\right)^{-1} \mathbf{X}'\boldsymbol\varepsilon\) and \(\mathbb{V}ar(\boldsymbol\varepsilon|\mathbf{X})=\boldsymbol\Sigma\), we have: \[\begin{equation} \mathbb{V}ar(\mathbf{b}|\mathbf{X}) = \frac{1}{n}\left(\frac{1}{n}\mathbf{X}'\mathbf{X}\right)^{-1}\left(\frac{1}{n}\mathbf{X}'\boldsymbol\Sigma\mathbf{X}\right)\left(\frac{1}{n}\mathbf{X}'\mathbf{X}\right)^{-1}.\tag{1.30} \end{equation}\]

Therefore, the conditional covariance matrix of the OLS estimator is not \(\sigma^2 (\mathbf{X}'\mathbf{X})^{-1}\) any longer, and using \(s^2 (\mathbf{X}'\mathbf{X})^{-1}\) for inference may be misleading. Below, we will see how to construct appropriate estimates of the covariance matrix of \(\mathbf{b}\). Before that, let us prove that the OLS estimator remains consistent in the GRM framework.

Proposition 1.13 (Consistency of the OLS estimator in the GRM framework) If \(\mbox{plim }(\mathbf{X}'\mathbf{X}/n)\) and \(\mbox{plim }(\mathbf{X}'\boldsymbol\Sigma\mathbf{X}/n)\) are finite positive definite matrices, then \(\mbox{plim }(\mathbf{b})=\boldsymbol\beta\).

Proof. We have \(\mathbb{V}ar(\mathbf{b})=\mathbb{E}[\mathbb{V}ar(\mathbf{b}|\mathbf{X})]+\mathbb{V}ar[\mathbb{E}(\mathbf{b}|\mathbf{X})]\). Since \(\mathbb{E}(\mathbf{b}|\mathbf{X})=\boldsymbol\beta\), \(\mathbb{V}ar[\mathbb{E}(\mathbf{b}|\mathbf{X})]=0\). Eq. (1.30) implies that \(\mathbb{V}ar(\mathbf{b}|\mathbf{X}) \rightarrow 0\). Hence \(\mathbf{b}\) converges in mean square, and therefore in probability (see Prop. 8.13).

Prop. 1.14 gives the asymptotic distribution of the OLS estimator in the GRM framework.

Proposition 1.14 (Asymptotic distribution of the OLS estimator in the GRM framework) If \(Q_{xx}=\mbox{plim }(\mathbf{X}'\mathbf{X}/n)\) and \(Q_{x\boldsymbol\Sigma x}=\mbox{plim }(\mathbf{X}'\boldsymbol\Sigma\mathbf{X}/n)\) are finite positive definite matrices, then: \[ \sqrt{n}(\mathbf{b}-\boldsymbol\beta) \overset{d}{\rightarrow} \mathcal{N}(0,Q_{xx}^{-1}Q_{x\boldsymbol\Sigma x}Q_{xx}^{-1}). \]

The IV estimator also features a normal asymptotic distribution:

Proposition 1.15 (Asymptotic distribution of the IV estimator in the GRM framework) If regressors and IV variables are “well-behaved”, then: \[ \mathbf{b}_{iv} \overset{a}{\sim} \mathcal{N}(\boldsymbol\beta,\mathbf{V}_{iv}), \] where \[ \mathbf{V}_{iv} = \frac{1}{n}(\mathbf{Q}^*)\mbox{ plim }\left(\frac{1}{n} \mathbf{Z}'\boldsymbol\Sigma \mathbf{Z}\right)(\mathbf{Q}^*)', \] with \[ \mathbf{Q}^* = [\mathbf{Q}_{xz}\mathbf{Q}_{zz}^{-1}\mathbf{Q}_{zx}]^{-1}\mathbf{Q}_{xz}\mathbf{Q}_{zz}^{-1}. \]

For practical purposes, one needs to have estimates of \(\boldsymbol\Sigma\) in Props. 1.14 or 1.15. The complication comes from the fact that \(\boldsymbol\Sigma\) is of dimension \(n \times n\), and its estimation —based on a sample of length \(n\)— is therefore infeasible in the general case. Notwithstanding, looking at Eq. (1.30), it appears that one can focus on the estimation of \(Q_{x\boldsymbol\Sigma x}=\mbox{plim }(\mathbf{X}'\boldsymbol\Sigma\mathbf{X}/n)\) (or \(\mbox{plim }\left(\frac{1}{n} \mathbf{Z}'\boldsymbol\Sigma \mathbf{Z}\right)\) in the IV case). This matrix being of dimension \(K \times K\), its estimation is easier.

We have: \[\begin{equation} \frac{1}{n}\mathbf{X}'\boldsymbol\Sigma\mathbf{X} = \frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{n}\sigma_{i,j}\mathbf{x}_i\mathbf{x}'_j. \tag{1.31} \end{equation}\]

The so-called robust covariance matrices are estimates of the previous matrix. Their computation is based on the fact that if \(\mathbf{b}\) is consistent, then the \(e_i\)’s are consistent estimators of the \(\varepsilon_i\)’s.

In the following sections (1.5.4 and 1.5.5), we present two types of robust covariance matrices.

1.5.4 HAC-robust covariance matrices

When only heteroskedasticity prevails, i.e., when matrix \(\boldsymbol\Sigma\) is as in Eq. (1.24), then one can use the formula proposed by White (1980) to estimate \(\frac{1}{n}\mathbf{X}'\boldsymbol\Sigma\mathbf{X}\) (see Example 1.8). When the residuals feature both heteroskedasticity and auto-correlation, then one can use the Newey and West (1987) approach (see Example 1.9).

Example 1.8 (Heteroskedasticity) This is the case of Eq. (1.24). We have \(\sigma_{i,j}=0\) for \(i \ne j\). Hence, in this case, we then need to estimate \(\frac{1}{n}\sum_{i=1}^{n}\sigma_{i}^2\mathbf{x}_i\mathbf{x}'_i\). White (1980) has shown that, under general conditions: \[\begin{equation} \mbox{plim}\left( \frac{1}{n}\sum_{i=1}^{n}\sigma_{i}^2\mathbf{x}_i\mathbf{x}'_i \right) = \mbox{plim}\left( \frac{1}{n}\sum_{i=1}^{n}e_{i}^2\mathbf{x}_i\mathbf{x}'_i \right). \tag{1.32} \end{equation}\] The estimator of \(\frac{1}{n}\mathbf{X}'\boldsymbol\Sigma\mathbf{X}\) therefore is: \[\begin{equation} M_{HC0} = \frac{1}{n}\mathbf{X}' \left[ \begin{array}{cccc} e_1^2 & 0 & \dots & 0 \\ 0 & e_2^2 & \\ \vdots & & \ddots&0 \\ 0 & \dots & 0 & e_n^2 \end{array} \right] \mathbf{X}.\tag{1.33} \end{equation}\] where the \(e_i\) are the OLS residuals of the regression. The previous estimator is often called HC0. The HC1 estimator, due to J. MacKinnon and White (1985), is obtained by applying an adjustment factor \(n/(n-K)\) for the number of degrees of freedom (as in Prop. 1.6). That is: \[\begin{equation} M_{HC1} = \frac{n}{n-K}M_{HC0}.\tag{1.34} \end{equation}\]

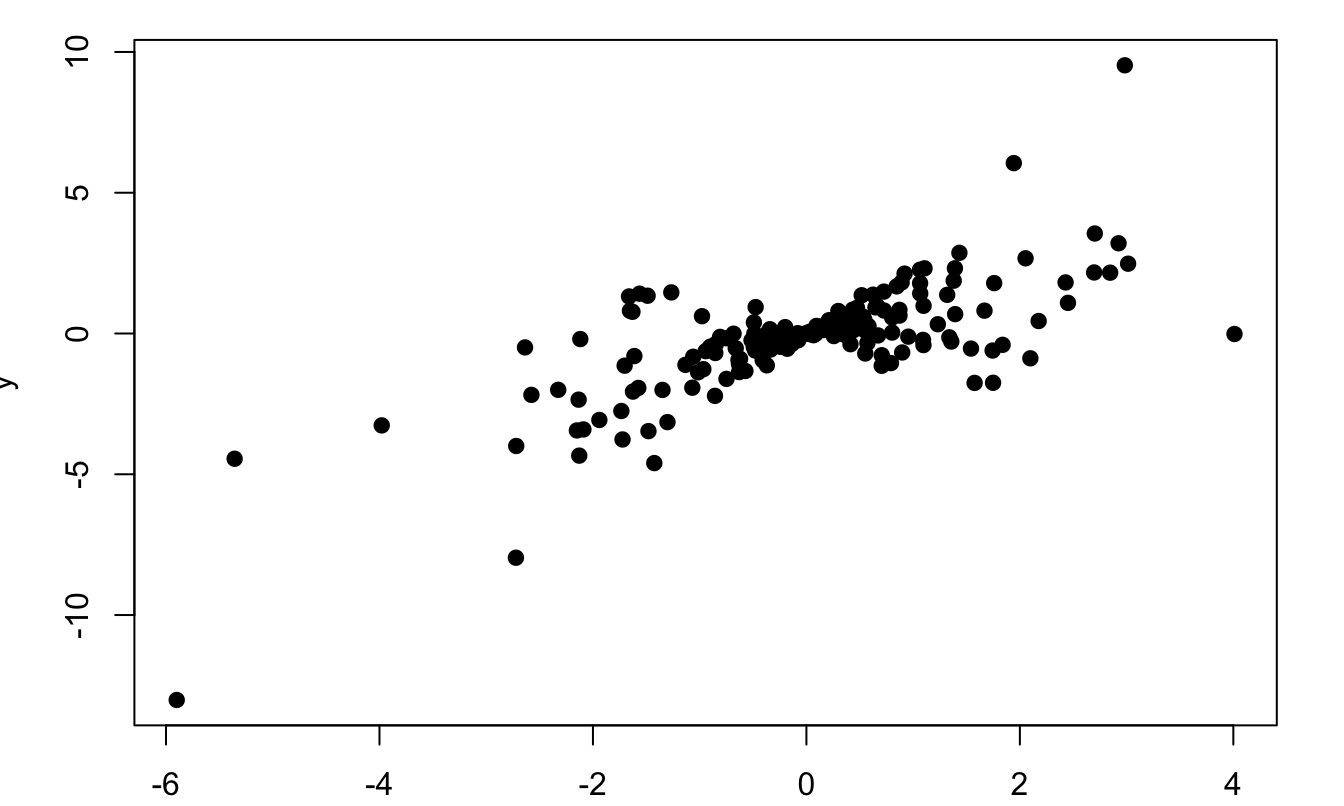

We can illustrate the influence of heteroskedasticity using simulations. Consider the following model: \[ y_i = x_i + \varepsilon_i, \quad \varepsilon_i \sim \mathcal{N}(0,x_i^2), \] where the \(x_i\)’s are i.i.d. \(t(6)\).

Here is a simulated sample (\(n=200\)) of this model:

Figure 1.6: Situation of heteroskedasticity. The model is \(y_i = x_i + \varepsilon_i, \quad \varepsilon_i \sim \mathcal{N}(0,x_i^2)\), where the \(x_i\)’s are i.i.d. \(t(6)\).

We simulate 1000 samples of the same model with \(n=200\). For each sample, we compute the OLS estimate of \(\beta\) (\(=1\)). For each of the 1000 OLS estimations, we employ (a) the standard OLS variance formula (\(s^2 (\mathbf{X}'\mathbf{X})^{-1}\)) and (b) the White formula to estimate the variance of \(b\). For each formula, we compute the average of the 1000 resulting standard deviations and compare these with the standard deviation of the 1000 OLS estimate of \(\beta\).

n <- 200 # sample size

N <- 1000 # number of simulated samples

XX <- matrix(rt(n*N,df=6),n,N)

YY <- matrix(XX + XX*rnorm(n),n,N)

all_b <- NULL;all_V_OLS <- NULL;all_V_White <- NULL

for(j in 1:N){

Y <- matrix(YY[,j],ncol=1)

X <- matrix(XX[,j],ncol=1)

b <- solve(t(X)%*%X) %*% t(X)%*%Y

e <- Y - X %*% b

S <- 1/n * t(X) %*% diag(c(e^2)) %*% X

V_OLS <- solve(t(X)%*%X) * var(e)

V_White <- 1/n * (solve(1/n*t(X)%*%X)) %*% S %*% (solve(1/n*t(X)%*%X))

all_b <- c(all_b,b)

all_V_OLS <- c(all_V_OLS,V_OLS)

all_V_White <- c(all_V_White,V_White)

}

c(sd(all_b),mean(sqrt(all_V_OLS)),mean(sqrt(all_V_White)))## [1] 0.14024423 0.06748804 0.13973431The results show that the White formula yields, on average, an estimated standard deviation that is much closer to the “true” value than the standard OLS formula. The latter underestimate the standard deviation of \(b\).

In the following example, we regress GDP growth rates from the Jordà, Schularick, and Taylor (2017) database on a systemic financial crisis dummy. We compute the HC0- and HC1-based standard deviations of the parameter estimate, and compare it to the one based on the standard OLS formula. The adjusted standard deviations are close to the one provided by the non-adjusted OLS formula.

library(lmtest)

library(sandwich)

nT <- dim(JST)[1]

JST$growth <- NaN

JST$growth[2:nT] <- log(JST$rgdpbarro[2:nT]/JST$rgdpbarro[1:(nT-1)])

JST.red <- subset(JST,year>1950)

JST.red$iso <- as.factor(JST.red$iso)

JST.red$year <- as.factor(JST.red$year)

eq <- lm(growth~crisisJST+iso+year,data=JST.red)

vcovHC0 <- vcovHC(eq, type = "HC0")

vcovHC1 <- vcovHC(eq, type = "HC1")

stargazer::stargazer(eq, eq, eq,type = "text",

column.labels = c("No HAC", "HC0","HC1"),

omit = c("iso","year"),no.space = TRUE,keep.stat = "n",

se = list(NULL,sqrt(diag(vcovHC0)),sqrt(diag(vcovHC1))))##

## ==========================================

## Dependent variable:

## -----------------------------

## growth

## No HAC HC0 HC1

## (1) (2) (3)

## ------------------------------------------

## crisisJST -0.015*** -0.015*** -0.015***

## (0.005) (0.005) (0.006)

## Constant 0.042*** 0.042*** 0.042***

## (0.005) (0.007) (0.007)

## ------------------------------------------

## Observations 1,258 1,258 1,258

## ==========================================

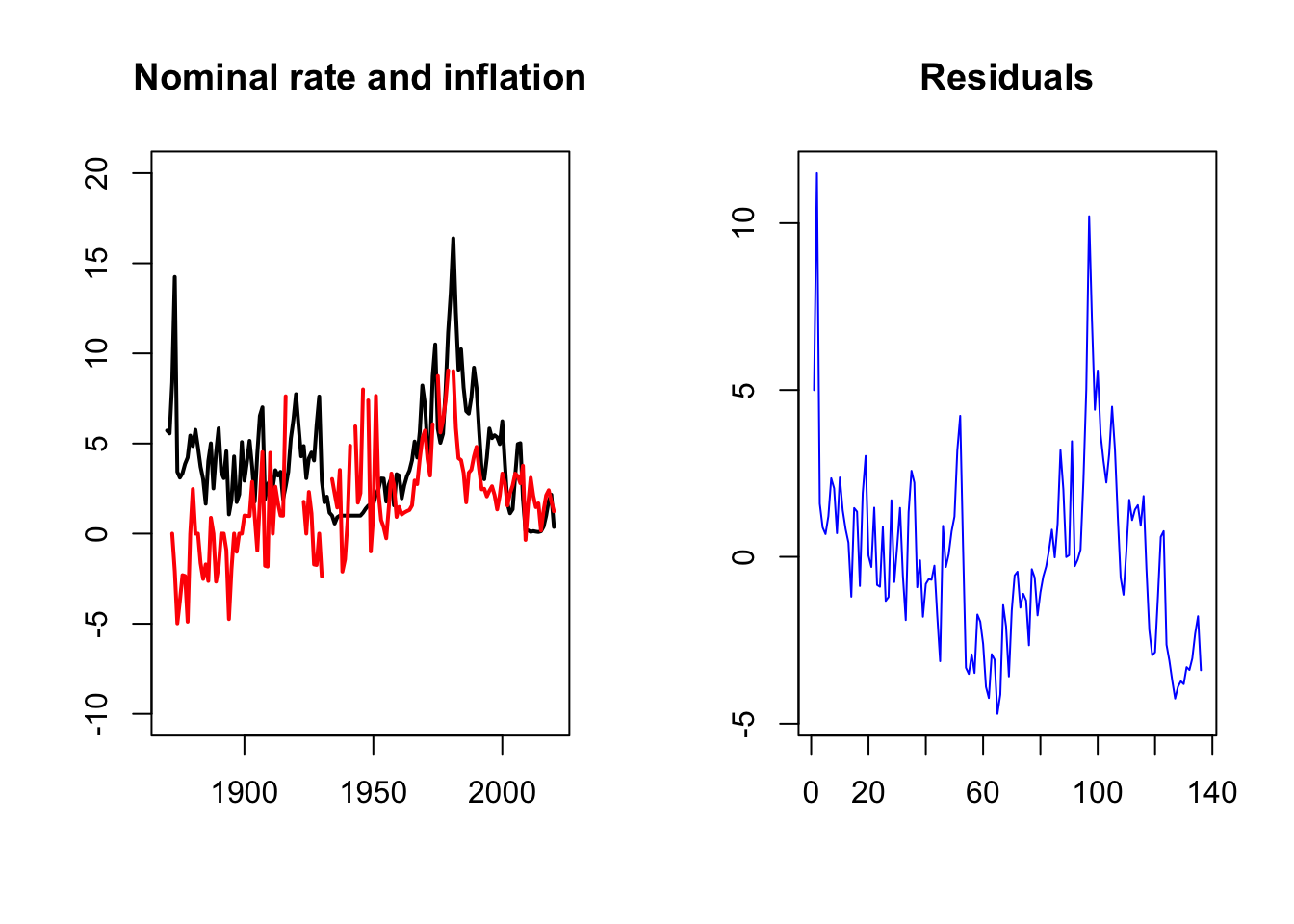

## Note: *p<0.1; **p<0.05; ***p<0.01Example 1.9 (Heteroskedasticity and Autocorrelation (HAC)) Newey and West (1987) have proposed a formula to address both heteroskedasticity and auto-correlation of the residuals (Eqs. (1.24) and (1.25)). They show that, if the correlation between terms \(i\) and \(j\) gets sufficiently small when \(|i-j|\) increases: \[\begin{eqnarray} &&\mbox{plim} \left( \frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{n}\sigma_{i,j}\mathbf{x}_i\mathbf{x}'_j \right) \approx \\ &&\mbox{plim} \left( \frac{1}{n}\sum_{t=1}^{n}e_{t}^2\mathbf{x}_t\mathbf{x}'_t + \frac{1}{n}\sum_{\ell=1}^{L}\sum_{t=\ell+1}^{n}w_\ell e_{t}e_{t-\ell}(\mathbf{x}_t\mathbf{x}'_{t-\ell} + \mathbf{x}_{t-\ell}\mathbf{x}'_{t}) \right), \nonumber \tag{1.35} \end{eqnarray}\] where \(w_\ell = 1 - \ell/(L+1)\) (with \(L\) large).